AI Industry Super PACs: The $100M Battle Over AI Regulation [2025]

When a New York state legislator introduced a bill requiring major AI developers to disclose their safety protocols and report serious system misuse, nobody expected it would spark one of the most bizarre political showdowns of 2025. But that's exactly what happened when Alex Bores sponsored the RAISE Act and suddenly found himself at the center of a multimillion-dollar battle between competing factions of the AI industry itself.

This isn't your typical political fight. It's not about left versus right, or even about which tech company dominates the market. It's about something far more fundamental: how much should AI developers have to tell regulators and the public about what their systems can do, and how they might go wrong?

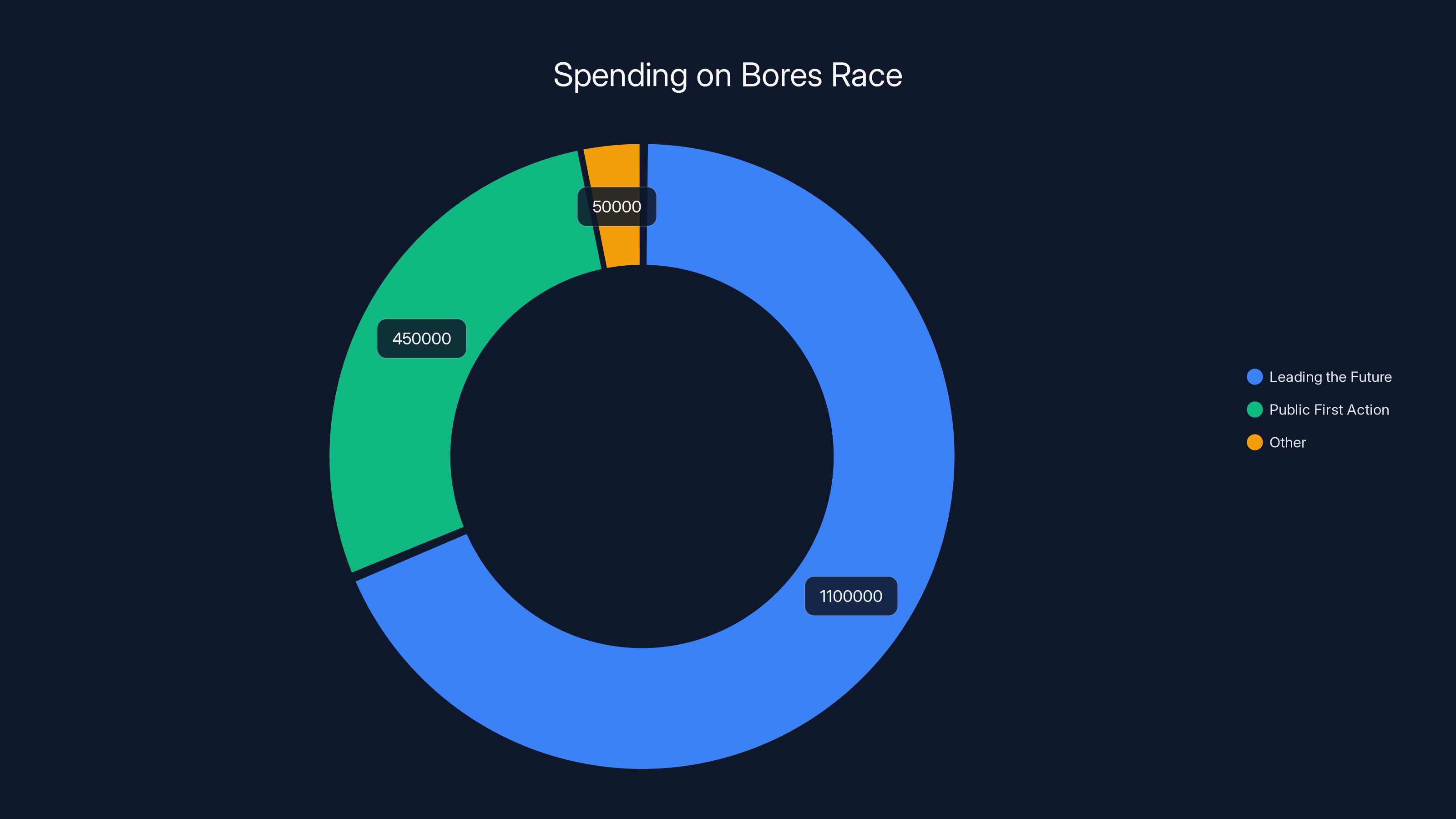

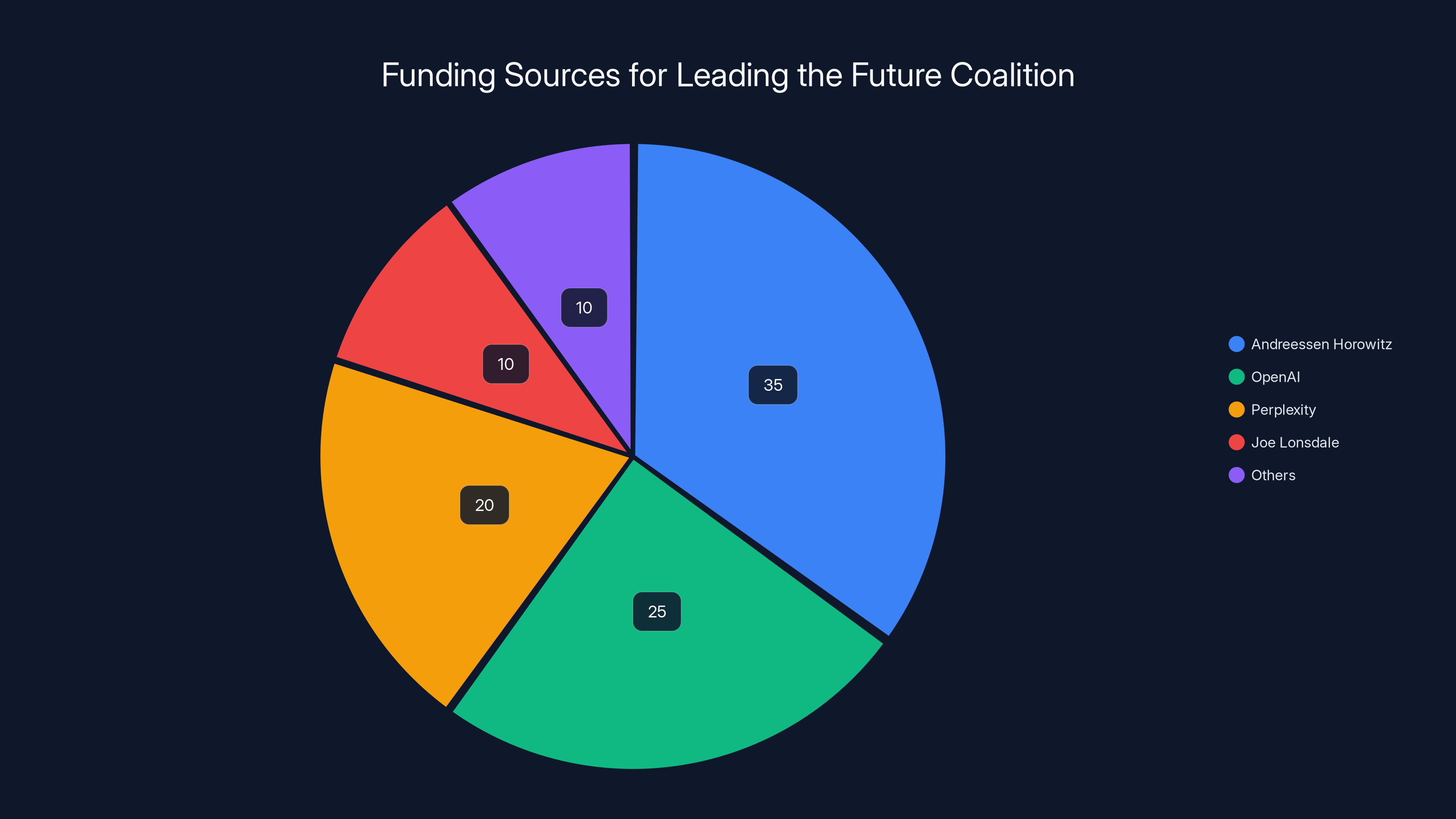

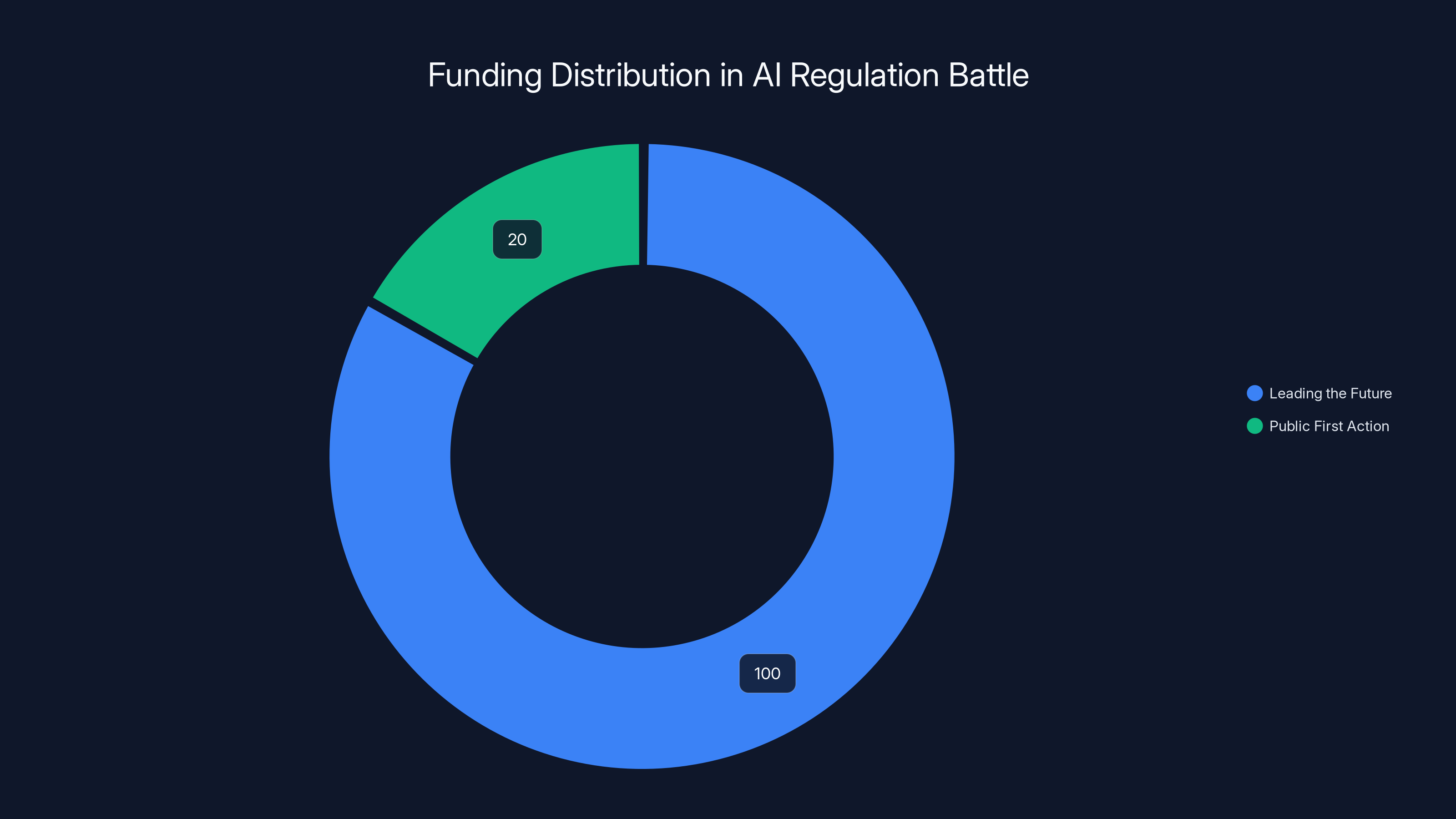

On one side, you've got Leading the Future, a pro-AI super PAC armed with more than $100 million from heavyweight backers including Andreessen Horowitz, OpenAI President Greg Brockman, AI search startup Perplexity, and Palantir co-founder Joe Lonsdale. They're spending big to take down Bores, viewing his RAISE Act as an existential threat to innovation.

On the other side, Public First Action, backed by a $20 million commitment from Anthropic, is spending $450,000 to support Bores.

What makes this fight so significant isn't just the money. It's what it reveals about the fracturing consensus within the AI industry itself. For years, tech leaders spoke with one voice about the need for minimal regulation and maximum speed-to-market. Now, they're openly fighting each other in the political arena over fundamentally different visions of responsible AI deployment.

This $1.5 million+ battle in a single New York congressional district is a microcosm of a much larger question: What does responsible AI governance actually look like? And who gets to decide—the companies building the technology, or elected officials accountable to voters?

TL;DR

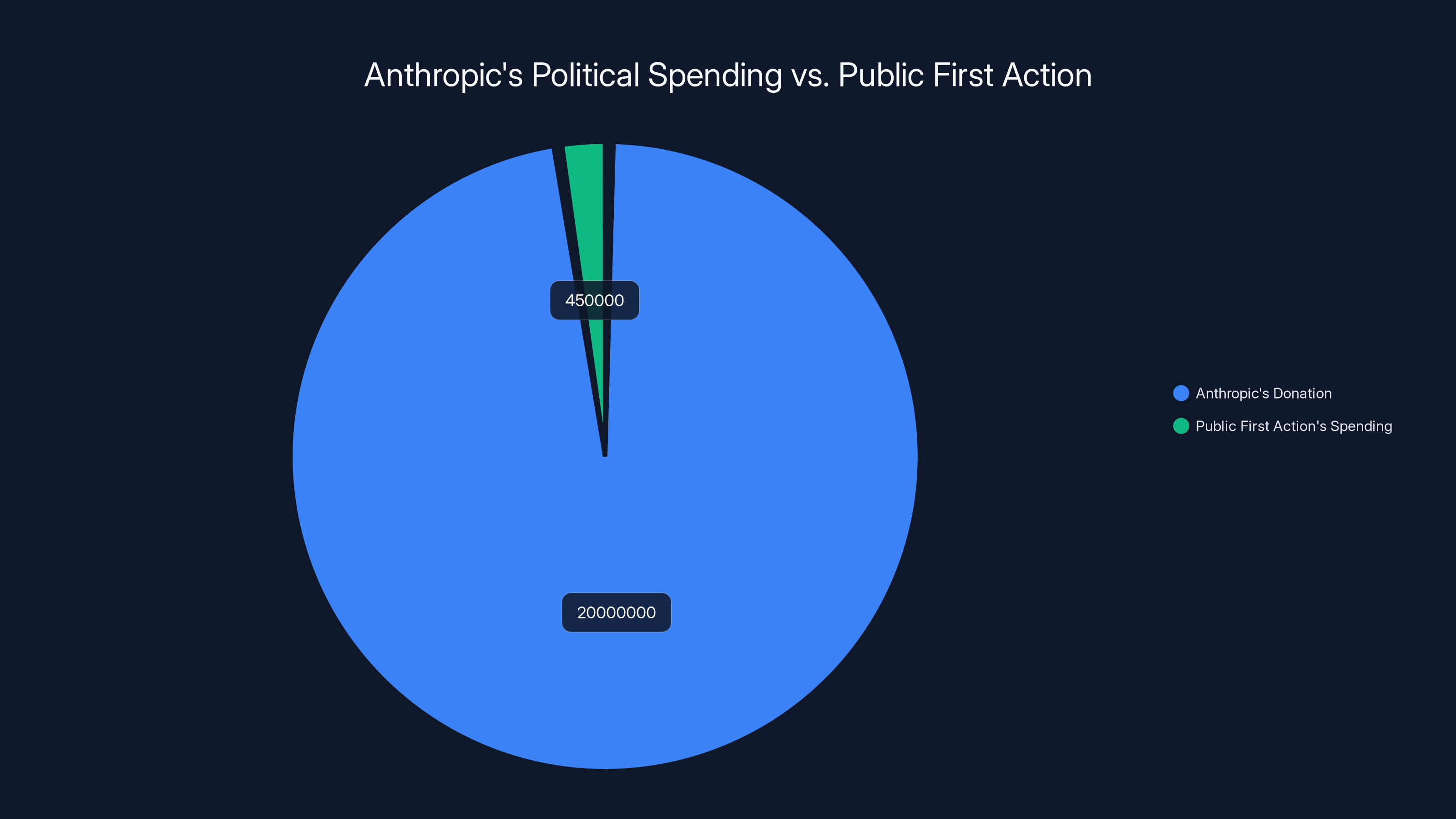

- Super PAC Spending: Anthropic-backed Public First Action is spending $450,000 supporting Bores, while Leading the Future has spent $1.1 million attacking him

- Core Issue: The RAISE Act requires AI developers to disclose safety protocols and report serious system misuse—a transparency requirement that divides the industry

- Industry Split: Andreessen Horowitz, OpenAI, Perplexity, and Palantir oppose the measure, while Anthropic backs it with $20 million in funding

- Unprecedented Scale: This is the first time major AI companies have funded opposing super PACs to fight over regulatory policy

- Broader Implications: The battle signals a fundamental divide in how the AI industry views safety, transparency, and government oversight

Leading the Future has spent over $1.1 million on ads attacking Bores.

Understanding the RAISE Act: What Bores Actually Proposed

Before you can understand why the AI industry is spending millions to either support or defeat Alex Bores, you need to understand what the RAISE Act actually does. It's not some radical proposal to shut down AI development or impose burdensome restrictions on innovation. But it does something the AI industry fundamentally disagrees about: it requires transparency.

The RAISE Act (short for the Responsible AI Safety and Education Act) has three core components. First, it requires major AI developers to disclose their safety testing protocols. Not trade secrets or proprietary models, but the basic methodologies they use to identify and mitigate risks before deploying systems to millions of users.

Second, it mandates that developers report serious misuse of their systems to state authorities. If someone uses your AI model to commit fraud, launch a cyberattack, or create non-consensual intimate imagery, you're required to notify regulators. This isn't hypothetical—these harms are already happening, and there's currently no mechanism for regulators to even know they're occurring.

Third, the act establishes a registry of AI safety incidents. Similar to how the FDA maintains a database of adverse events for drugs and medical devices, New York would maintain a public-facing database of AI misuse incidents. The data would be aggregated and anonymized, but regulators and the public would have visibility into which systems are causing problems and at what scale.
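To make "aggregated and anonymized" concrete, here is a minimal sketch of how such a registry could publish counts without exposing who filed each report. Everything in it is hypothetical: the `IncidentReport` fields, the category names, and the sample data are assumptions made for illustration and are not taken from the bill's text.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class IncidentReport:
    """Hypothetical raw report as a developer might file it."""
    system: str       # assumed field: name of the AI system involved
    category: str     # assumed field: e.g. "fraud" or "cyberattack"
    reporter_id: str  # identifying detail that should never be published


def aggregate(reports):
    """Count incidents per (system, category), dropping identifying fields."""
    return Counter((r.system, r.category) for r in reports)


# Made-up sample data, for illustration only.
reports = [
    IncidentReport("model-a", "fraud", "dev-001"),
    IncidentReport("model-a", "fraud", "dev-002"),
    IncidentReport("model-b", "cyberattack", "dev-003"),
]

summary = aggregate(reports)
for (system, category), count in sorted(summary.items()):
    print(f"{system}\t{category}\t{count}")
```

The public view contains only system, category, and count. The reporter identifier never leaves the raw record, which is the property an anonymized registry would need to preserve.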

On paper, this sounds reasonable. Most other industries operate under similar transparency requirements. Pharmaceutical companies disclose clinical trial protocols. Autonomous vehicle makers report safety incidents. Airlines document maintenance issues. But the AI industry has argued for years that AI is different—that innovation moves so fast that regulatory oversight would slow it down and cede leadership to other countries.

What's interesting is that Bores himself isn't an anti-tech crusader. He's been supportive of AI development in New York. He just thought that safety transparency and incident reporting were reasonable guardrails that wouldn't actually inhibit innovation. In his view, if you're confident your safety protocols are sound, why wouldn't you be willing to disclose them?

That question has now become the flashpoint for a $1.5 million proxy war.
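The $1.5 million figure can be checked against the two spending totals cited in this article: $1.1 million from Leading the Future and $450,000 from Public First Action. The short calculation below uses only those two numbers; the variable names are illustrative.

```python
# Spending figures as cited in this article (in US dollars).
leading_the_future = 1_100_000   # attack ads against Bores
public_first_action = 450_000    # ads supporting Bores

total = leading_the_future + public_first_action
ratio = leading_the_future / public_first_action

print(f"Combined spending: ${total:,}")
print(f"Spending ratio: {ratio:.2f} to 1")
```

On these figures, combined spending is a little over $1.5 million, and the opposition is outspending the supporting side by somewhat more than 2-to-1.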

Leading the Future: The $100M Opposition Coalition

Leading the Future isn't your typical single-donor super PAC. It's a coalition of some of Silicon Valley's most prominent investors and AI executives, all united by the belief that the RAISE Act would damage American AI competitiveness. The group has raised over $100 million, making it one of the best-funded advocacy organizations focused specifically on technology policy.

Andreessen Horowitz is one of the major backers, which makes sense given the firm's massive bets on AI companies. The venture capital firm has poured billions into AI startups over the past two years, and any requirement that these companies disclose safety protocols or report incidents would directly impact their portfolio companies' compliance costs and operational complexity.

Greg Brockman, OpenAI's President, has personally backed the effort. This is significant because it shows OpenAI's leadership isn't just opposed to the measure in theory—they're actively funding opposition campaigns. For a company that has positioned itself as safety-conscious and responsible, this sends a clear message about where their priorities actually lie when regulations threaten their business model.

Perplexity, the AI search startup that's raised hundreds of millions in venture funding, is also backing the effort. The company's business model depends on rapid deployment of search and synthesis capabilities. If every deployment required safety disclosures and incident reporting, it would add friction to their development cycle.

Joe Lonsdale, Palantir's co-founder and a prominent voice in Silicon Valley, has also committed funding. Lonsdale is known for his libertarian views on technology regulation and has been vocal about the dangers of what he sees as regulatory overreach.

The super PAC's strategy has been straightforward: flooding the market with attack ads against Bores. As of February 2025, they've already spent $1.1 million in a single congressional district. These aren't subtle ads either. The messaging focuses on painting Bores as anti-innovation, anti-business, and hostile to the industry that drives New York's economy.

What's telling is the scale of the spending. A congressional race in New York's 12th district wouldn't normally attract more than a few million dollars in total spending. But Leading the Future's $1.1 million in attack ads alone makes this one of the most expensive races in the country on a per-capita basis. That's a sign of just how seriously the AI industry's major players are taking the RAISE Act threat.

The coalition's messaging also reveals something important: they're not arguing on the merits of transparency. Instead, they're using broader arguments about innovation, competitiveness, and job creation. The implicit message is that any regulation—even reasonable transparency requirements—will chase innovation to other countries and cost jobs.

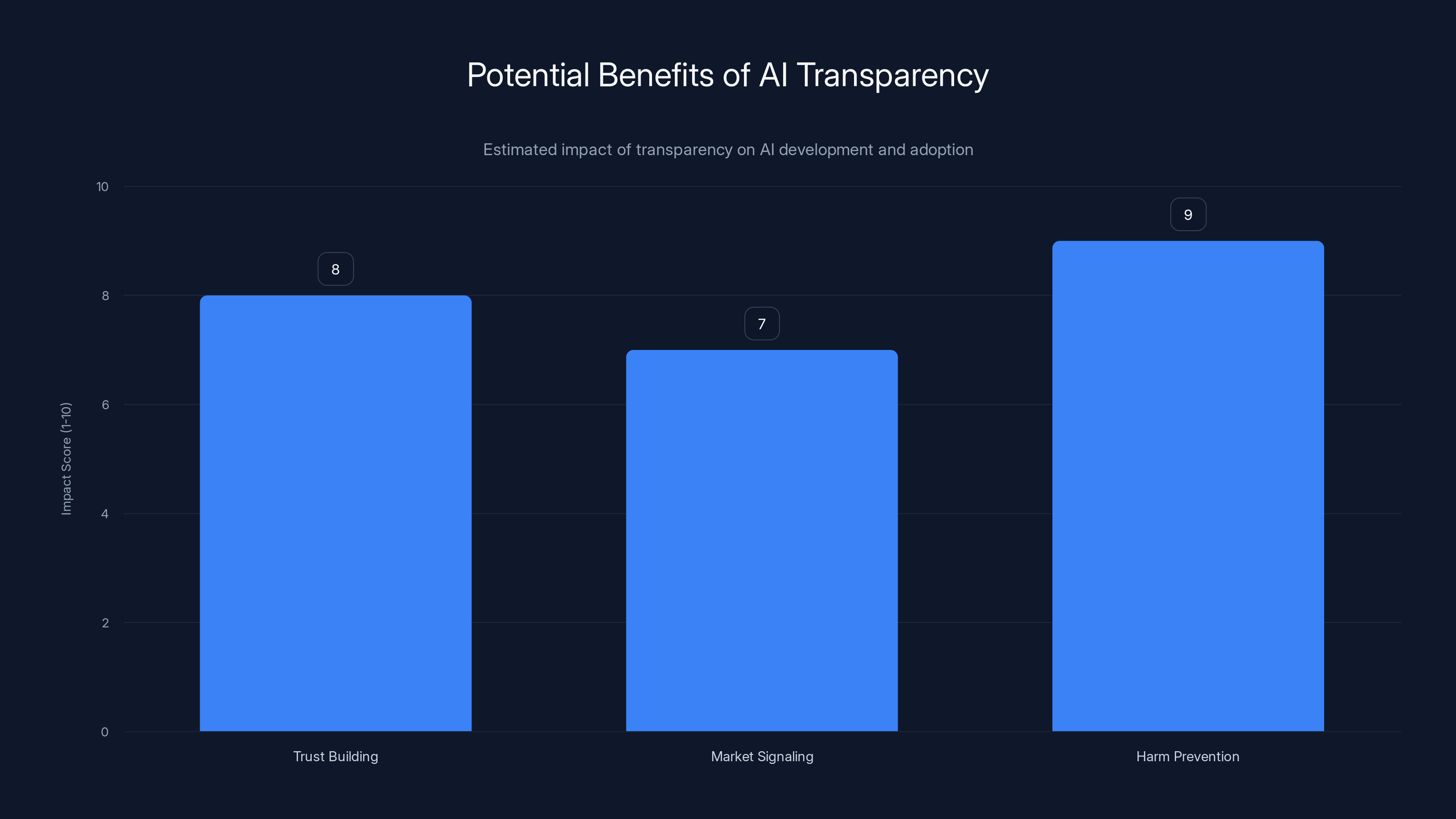

Transparency in AI can significantly enhance trust, provide market advantages, and help prevent harms, with estimated impact scores of 8, 7, and 9 respectively.

Anthropic's Counteroffensive: Public First Action's $20 Million Bet

Anthropic is a relative newcomer to the AI industry compared to OpenAI or Google. Founded in 2021 by former OpenAI researchers including Dario and Daniela Amodei, the company has positioned itself as the "AI safety-focused" alternative. But Anthropic isn't just talking about safety—they're putting serious money behind it when it matters politically.

The company has committed $20 million to Public First Action, the super PAC backing Bores.

What's fascinating is why Anthropic is making this bet. The company doesn't have the massive installed user base of OpenAI or the deep government relationships of Google. Anthropic's competitive advantage is its genuine commitment to AI safety research and its willingness to turn down profitable opportunities if they conflict with safety principles. The RAISE Act aligns perfectly with that brand positioning.

By spending $20 million to support Bores, Anthropic is essentially saying: "We actually believe transparency and safety should matter, and we're willing to back that belief with real political muscle." This is either genuine commitment to safety principles, or it's brilliant marketing—or both. Either way, it's a completely different political strategy than what Leading the Future is pursuing.

Public First Action's $450,000 spending is being deployed differently than Leading the Future's attack ads. Instead of negative messaging about Bores, the group is running positive ads about the importance of AI safety, responsible development, and public oversight. They're making the affirmative case for transparency, rather than just attacking the opposition.

The group is also taking a longer-term approach. While Leading the Future is focused purely on defeating Bores in this election cycle, Public First Action is building a narrative around the importance of safety-conscious AI governance. They're positioning Anthropic and their backers as the responsible actors in the industry.

What's important to note is that this isn't Anthropic being anti-innovation. The company is fully supportive of AI development and deployment. But Anthropic's thesis is that transparency actually enables more innovation in the long run, because it builds public trust in AI systems. If companies are forced to hide their safety practices and incident reports, the public will eventually demand much stricter regulation. But if companies are transparent about their practices and demonstrate they're taking safety seriously, the public becomes more comfortable with innovation.

The Broader Industry Fracture: Why This Matters Beyond New York

What's happening in New York's 12th district isn't unique to that race. It's a symptom of a much larger fracture within the AI industry about what responsible governance should look like. For the first time, we're seeing major AI companies openly fund opposing political campaigns over fundamental questions about how AI should be regulated.

Historically, the tech industry has been relatively unified on regulatory issues. When Congress held hearings on tech regulation, you might see disagreement on antitrust or privacy issues, but there was broad consensus that innovation should be prioritized and regulatory restrictions minimized. But AI has fractured that consensus.

There are several reasons for this fracture. First, different companies are pursuing fundamentally different approaches to AI development. OpenAI and Perplexity are racing to deploy capabilities as quickly as possible, betting that the value of advanced AI systems will be so obvious that society will adapt around them. Anthropic, by contrast, is betting that slower, more careful development with genuine safety focus is a better long-term strategy.

Second, the companies have different business models. OpenAI's strength is in foundational models that power other companies' products. Anthropic doesn't have that ecosystem dependency yet. Perplexity needs rapid iteration to stay competitive with Google's search dominance. For OpenAI and Perplexity, transparency requirements feel like existential threats to their strategies.

Third, there are genuine philosophical differences about the role of corporations versus government in AI governance. OpenAI and its backers believe the companies building AI should set their own safety standards, with minimal government oversight. Anthropic and its backers believe that public interest requires government involvement in setting baseline standards and oversight requirements.

What's particularly interesting is that this isn't a fight between AI companies and the anti-tech left. It's a fight within the AI industry itself about what responsible development looks like. Anthropic isn't running ads saying AI is dangerous and should be severely restricted. They're saying AI is powerful and important, but requires transparency and safety protocols to realize its potential responsibly.

This distinction matters because it legitimizes the idea that the companies building AI aren't the only legitimate voice in deciding AI policy. If the only voices were Andreessen Horowitz and OpenAI arguing for minimal regulation, you could dismiss it as corporate self-interest. But when Anthropic—which also makes money from AI—argues for transparency requirements, it suggests that reasonable people within the industry can disagree on governance approaches.

The Economics of AI Regulation: Why Transparency Costs Matter

Underneath all the political posturing, there's a real economic question: How much does transparency actually cost companies, and would those costs stifle innovation?

Leading the Future's argument is fundamentally economic. They claim that requiring safety disclosures, incident reporting, and registry participation would impose substantial compliance burdens that would disproportionately affect smaller AI companies and startups. They argue that only the best-funded companies could afford the legal and operational infrastructure to handle these requirements, thereby entrenching the incumbents and stifling competition.

It's a familiar argument from previous regulatory debates. When new compliance requirements get proposed, the industry always argues that they'll create barriers to entry. And sometimes that's true. But the empirical evidence from other industries is mixed.

Consider the FDA's drug approval process. It's expensive and time-consuming—often requiring 10+ years and $2+ billion to bring a new drug to market. This does create barriers to entry, and it does slow innovation. But most people would argue that the safety benefits of the FDA approval process justify the costs. Without it, we'd have unsafe drugs on the market causing serious harms.

The RAISE Act is nowhere near as burdensome as FDA approval. It's asking for safety disclosures and incident reporting, not pre-market approval. The compliance costs would likely be real but manageable for funded companies. A startup with decent funding should be able to handle these requirements without significant difficulty.

That said, there are legitimate concerns about compliance costs. If the registry requirements are poorly designed, they could create unnecessary burdens. If the safety disclosure requirements are too prescriptive, they could stifle innovation by locking companies into specific approaches. The devil is definitely in the regulatory details.

Anthropic's counter-argument is that transparency costs are minimal compared to the risks of a regulatory backlash if harms go unreported. If a major AI incident occurs and regulators discover that companies knew about the risks but didn't disclose them, the result would be much stricter regulation. But if companies are proactively transparent, regulators get the data they need and can work collaboratively with companies to address problems.

From an economic perspective, there's actually a decent case that transparency helps innovation in the long run. When regulators understand your safety practices and agree with your approach, they're less likely to impose stricter rules later. But when regulators are kept in the dark, they eventually demand much more extensive oversight.

The question isn't really whether transparency costs anything. It does. The question is whether the benefits of that transparency—better regulatory understanding, reduced risk of backlash, maintained public trust—outweigh the compliance costs.

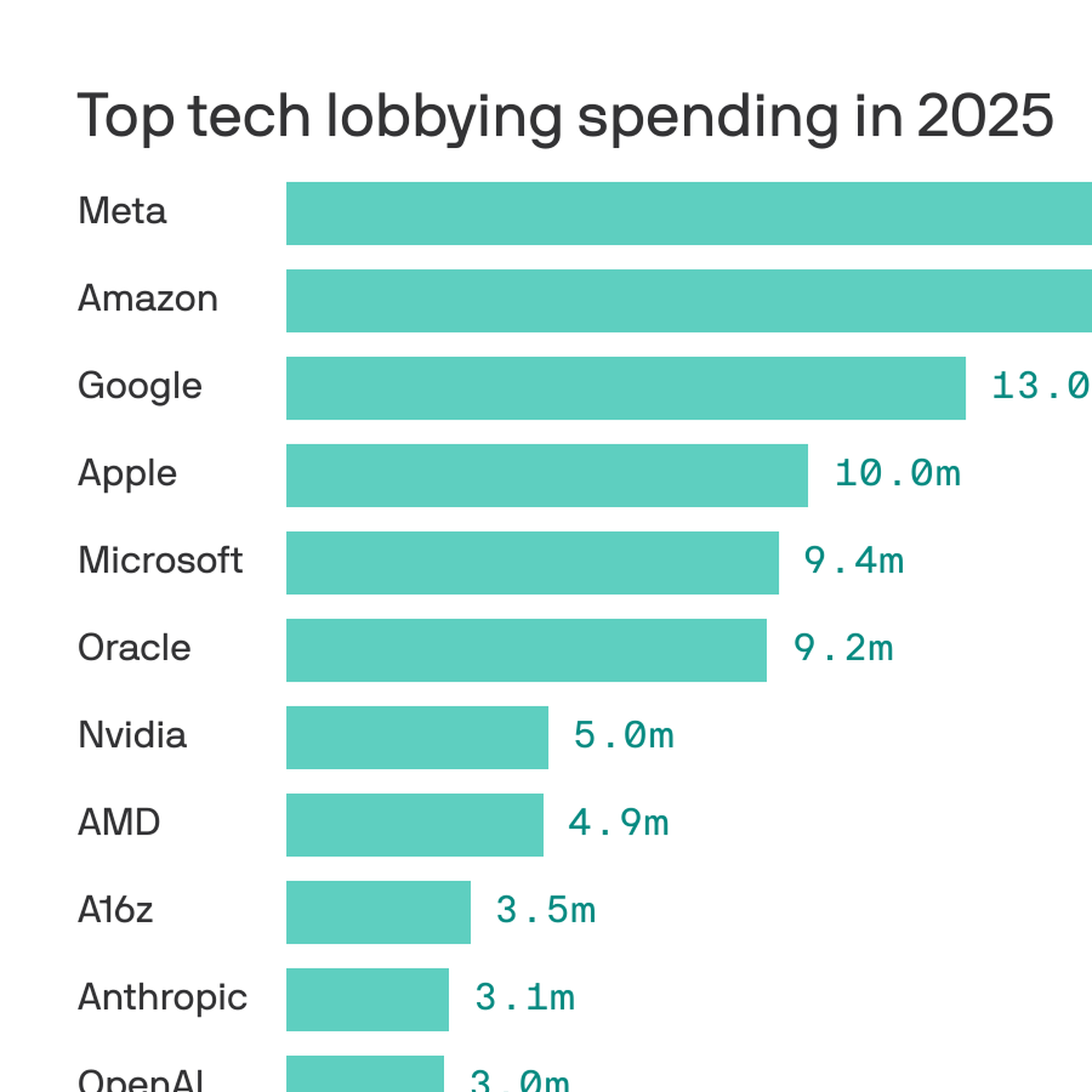

Estimated data shows Andreessen Horowitz as the largest contributor to the $100M fund, followed by OpenAI and Perplexity.

New York's AI Regulatory Landscape: Why This Fight Happened Here

New York isn't randomly selected as the battleground for this fight. The state has become the epicenter of AI policy debates in America, and there are specific reasons why Bores and the RAISE Act became the focal point of this industry battle.

First, New York is home to significant AI research and development. While Silicon Valley dominates venture capital, New York has major research institutions like Columbia University, NYU, and the City College of New York doing cutting-edge AI work. The state also has growing AI startup communities in Brooklyn and Manhattan. That means AI policy in New York actually affects real companies and researchers.

Second, New York has been more aggressive on tech regulation than most states. New York City requires bias audits for automated hiring tools, state lawmakers have advanced antitrust legislation aimed at large tech companies, and the legislature has proposed various AI safety measures. New York's legislature has shown it's willing to regulate tech in ways that other states aren't. That makes it a critical battleground: if regulation passes in New York, it's likely to influence other states and potentially federal policy.

Third, New York's 12th congressional district is politically moderate and well-educated, which makes it responsive to nuanced policy arguments. This isn't a ruby-red or deep-blue district where ideology is locked in. It's a swing area where informed voters might actually care about the specifics of AI policy. That makes it a good place for both sides to test messaging and build case studies.

Fourth, Alex Bores himself is an interesting political figure. He's not a partisan firebrand. He's a relatively moderate legislator who has supported business development while also believing in reasonable safety standards. That makes him a credible messenger for the idea that you can support AI innovation while also requiring transparency. He's not saying "AI is bad"—he's saying "AI is important and powerful, so let's have transparent oversight."

What's particularly smart about the PAC spending is that it's using New York's 12th district as a template for a national strategy. Both Leading the Future and Public First Action are essentially treating this race as a proof-of-concept for how to build political power around AI policy. If Bores wins, it validates the strategy of using pro-transparency messaging and building a coalition around responsible AI. If he loses, it validates the opposing strategy of focused spending against a specific legislative threat.

This district is also closely watched by state legislators in California, Massachusetts, and other states considering AI regulations. A Bores loss would send a powerful message that industry spending can defeat pro-regulation candidates. A Bores win would signal that voters care about AI safety and that transparency requirements are politically viable.

The Argument Against Transparency: Leading the Future's Case

To fairly understand this fight, you need to understand the genuinely compelling arguments that Leading the Future is making against the RAISE Act. It's not just corporate self-interest—there are real intellectual arguments about why transparency requirements could be problematic.

First, there's the innovation speed argument. AI is moving incredibly fast. The pace of capability improvements in large language models, image generation, and other domains is accelerating dramatically. If every significant deployment requires safety disclosures, incident reporting, and registry participation, it adds friction to development cycles. Even if the friction isn't huge, in a domain where six-month advantages translate to billions in market value, any slowdown is significant.

Leading the Future argues that this speed matters not just for companies, but for society. The companies developing advanced AI capabilities represent America's competitive advantage. If regulation slows them down while other jurisdictions (China, the EU, the UK) are moving fast, America risks losing leadership in one of the most important technologies of the coming decades. From this perspective, the RAISE Act isn't just bad for business; it's bad for American geopolitical standing.

Second, there's the proprietary information argument. These companies argue that their safety protocols and testing methodologies contain valuable proprietary information that they've invested heavily in developing. Forcing them to disclose these practices to regulators, even in confidence, risks the information being leaked or subpoenaed in litigation. If competitors or malicious users can see your safety testing approaches, they can copy them or work around them.

This argument isn't without merit. There's a real tension between transparency and trade secret protection. You can't truly protect intellectual property if you're required to disclose your methodologies. The RAISE Act tries to handle this by allowing in-confidence disclosures to regulators, but companies worry that courts could force disclosure anyway in litigation.

Third, there's the competitive disadvantage argument for American companies. If American AI companies are required to disclose safety practices while Chinese competitors face no such requirements, Americans are at a disadvantage. The global AI race isn't just about technical capability. It's also about regulatory burden. If the U.S. imposes costly transparency requirements while competitors don't, U.S. companies are less competitive.

Leading the Future's response to this is that the solution is global regulatory harmonization, not unilateral American restraint. But they acknowledge that unilateral requirements do create competitive problems in the short run.

Fourth, there's the "we're already doing this" argument. OpenAI, Anthropic, Perplexity, and other leading AI companies do conduct safety testing and do think carefully about misuse. They argue that forcing them to file reports and disclosures doesn't actually change their practices—it just adds bureaucratic overhead. From this perspective, the RAISE Act is security theater that makes regulators and the public feel good without actually improving safety.

These aren't silly arguments. They're the kinds of arguments that reasonable tech policy experts grapple with. The question isn't whether they're valid—they have merit. The question is whether they outweigh the benefits of transparency and oversight.

The Argument for Transparency: Why Anthropic's Bet Makes Sense

Anthropic's argument for the RAISE Act is based on a different premise: transparency is actually good for long-term AI development and adoption. This isn't a pro-regulation argument in the way traditional tech skeptics make it. It's a pro-innovation argument that happens to embrace regulation.

First, there's the trust argument. Advanced AI systems will only be broadly adopted if the public trusts them. The public won't trust AI systems if they believe companies are hiding safety information or covering up incidents. Trust requires transparency. If Anthropic is right, then companies that are transparent about safety practices will build more trust, enabling faster adoption and growth. Companies that hide their practices will face increasing skepticism and eventually much stricter regulation.

Second, there's the market signaling argument. If some AI companies are transparent about their safety practices while others refuse to disclose, the market will naturally interpret that as a sign of different safety levels. Transparent companies benefit from the signal. This creates a competitive advantage for companies that embrace transparency, even if transparency costs something.

Third, there's the harm prevention argument. The AI systems currently being deployed are already causing real harms. Fraud, non-consensual intimate imagery, misinformation, job displacement—these are happening now. If regulators don't know about these harms, they can't help address them. The RAISE Act's incident reporting requirement gives regulators the data they need to work with companies on preventing future harms.

This is particularly important because many AI harms fall into a regulatory gray zone. It's not clear whether existing laws even apply to some AI misuses. By creating clear incident reporting requirements, the RAISE Act gives regulators visibility into the problem and allows them to develop appropriate responses.

Fourth, there's the risk management argument. AI systems are powerful and have the potential for major downside risks (disinformation at scale, autonomous systems failures, etc.). Risk management best practices require that organizations understand and monitor their risks. The RAISE Act's safety protocol disclosure requirement is basically asking companies to formalize the risk management practices they should already be doing.

Fifth, there's the precedent argument. Every other regulated industry—pharmaceuticals, aviation, finance, vehicles—requires some level of safety disclosure and incident reporting. If the AI industry exempts itself from these basic oversight practices, it signals that AI is fundamentally different and doesn't need the same guardrails as other powerful technologies. That's a politically untenable position long-term.

Anthropic's deeper argument is that the AI industry's credibility depends on demonstrating that it takes safety seriously. If companies resist reasonable transparency requirements, the public will conclude that they don't actually care about safety—they only care about growth. That conclusion will eventually lead to much stricter regulation. But if companies embrace transparency, they prove their commitment to safety and maintain the trust needed for more permissive regulation.

From this perspective, Anthropic's $20 million bet on the RAISE Act isn't just about this particular law. It's about establishing norms for the entire AI industry about the importance of transparency and accountability.

Estimated data suggests that compliance costs under AI regulations could be substantial for smaller companies, potentially affecting innovation and competition.

Political Strategy: How Both Sides Are Playing the Game

Beyond the policy arguments, this fight is also interesting as a study in political strategy. Both Leading the Future and Public First Action are using sophisticated strategies to achieve their goals, and they reveal a lot about how tech policy battles are actually fought.

Leading the Future's strategy is relatively straightforward: overwhelm the opposition with money and messaging. They're outspending Public First Action roughly 2.4-to-1 in this race ($1.1 million versus $450,000).

The group's messaging focuses on economic arguments rather than policy substance. They're not debating whether transparency is good for AI safety—they're arguing that Bores is bad for jobs, bad for innovation, bad for New York's economy. This is a smart political move because it's easier to win an emotional argument about jobs than a technical argument about safety protocols.

Public First Action's strategy is more sophisticated but riskier. They're not just attacking their opponents—they're trying to reframe the entire debate. Instead of letting the conversation be about "should we regulate AI," they're trying to make it about "who cares about AI safety." They're building Bores as a champion of responsible innovation rather than a regulator.

This strategy requires that voters actually care about AI policy. In most races, voters prioritize jobs, schools, healthcare, and personal economics. AI safety and transparency requirements are abstract policy debates that don't obviously connect to voters' daily lives. Public First Action is betting that they can make this connection—that voters will see Bores's transparency requirements as important enough to outweigh concerns about innovation or job creation.

It's a big bet. It might work if there's a major AI incident that makes safety salient to voters. It might fail if voters never see AI safety as a personal priority. The group's strategy essentially requires that they reorder voters' priorities, which is difficult.

Both groups are also using a common political tactic: building coalitions around their positions. Leading the Future is explicitly talking about bringing together "pro-innovation" voices. They're framing their position as pro-business, pro-growth, pro-jobs. Public First Action is building a coalition around "responsible innovation" and positioning their side as thoughtful and careful rather than reckless.

The groups are also targeting different audiences. Leading the Future's attack ads are likely focused on swing voters and business-oriented Republicans. They're using economic arguments that appeal to business owners and job creation concerns. Public First Action's support ads are likely focused on educated voters and progressives. They're using arguments about responsibility, oversight, and long-term thinking that appeal to these voters.

Both sides are also thinking about precedent and future implications. A Bores loss would discourage pro-regulation candidates everywhere. A Bores win would encourage them. Both groups understand that this single race is a proxy battle for broader questions about whether tech companies can use political spending to defeat pro-regulation candidates.

The Precedent Effect: What This Race Means for Tech Policy Broadly

While the immediate question is whether Alex Bores wins his race in New York's 12th district, the broader implication is about what precedent this sets for future tech policy battles. If you're a legislator considering introducing AI regulations, you're watching this race very carefully.

If Bores loses, the message to other legislators is clear: you can introduce reasonable-sounding AI regulations, but if the major companies oppose you, they will spend massive amounts of money to defeat you. The ROI on introducing AI regulations becomes negative. You get attacked by industry, you lose the race, and the regulations don't pass. Why would you take that risk?

If Bores wins, the message is different: you can introduce AI regulations, and even if industry opposes you with major spending, you can prevail if you build a coalition around responsible innovation. The industry's political spending isn't determinative. That emboldens other legislators to take similar positions.

This pattern has played out repeatedly in tech policy. In the early 2000s, tech companies spent heavily against regulation and mostly won those battles. It created a permissive regulatory environment that enabled rapid innovation but also led to genuine harms in antitrust, privacy, and content moderation. By the 2020s, regulators and legislators had become much more skeptical of tech industry arguments, partly because they'd seen the harms and partly because the industry's political credibility had declined.

The AI regulation battles of 2025 are at a critical inflection point. If industry spending easily defeats pro-regulation candidates, it reinforces the pattern of industry dominance. If pro-regulation candidates can win despite industry opposition, it suggests that the regulatory pendulum is swinging back toward more oversight.

What's important is that this isn't just an American phenomenon. Globally, there's pressure for more AI regulation. The EU has already passed the AI Act. The UK, Canada, Australia, and other countries are developing regulatory frameworks. The question for each country is whether they'll allow tech companies to substantially shape regulation through political spending and lobbying, or whether they'll insist on democratic processes that include multiple voices.

The Bores race is particularly important because it's not about federal policy. It's about state-level regulation. Federal regulatory battles attract tons of attention and money. But state regulations are often where the real action happens, because states can move faster than the federal government and can experiment with different approaches. If the AI industry can successfully use super PAC spending to defeat pro-regulation candidates at the state level, they maintain veto power over AI governance. But if pro-regulation candidates can win despite that spending, the industry's veto power erodes.

International Implications: How U.S. AI Policy Affects Global Development

While the RAISE Act is a New York state measure, its implications are genuinely global. The United States' approach to AI regulation influences how other countries regulate, and it affects the competitiveness of American companies versus international competitors.

The EU's AI Act, which became law in 2024, requires companies to conduct impact assessments, maintain documentation of their AI systems, and report serious incidents to regulators. These requirements are more extensive than what the RAISE Act proposes. But the EU is smaller and moving slowly on implementation. The question is whether other countries will follow the EU's more restrictive path or try to match U.S. approaches if the U.S. stays more permissive.

China has taken a middle path, requiring some disclosure and oversight but maintaining strong state control. The country has published regulations on generative AI that require companies to conduct security assessments and monitor for potentially harmful content. But the rules are focused on state security rather than consumer safety.

The UK has taken a light-touch approach, relying on voluntary industry standards and future regulatory frameworks rather than strict rules. The country's perspective is that AI is moving so fast that regulation should be adaptive and minimal.

If the U.S. continues to resist transparency requirements like the RAISE Act, it signals that American companies can compete effectively without strict safety disclosures. That helps American companies relative to their EU counterparts, who face stricter requirements. But it also risks creating a regulatory arbitrage situation where companies in restrictive jurisdictions relocate to permissive ones.

Anthropic's argument is that American leadership in AI requires not just fast innovation but responsible innovation that builds public trust. If American companies are seen as cutting corners on safety to maximize speed, American leadership becomes politically vulnerable. But if American companies embrace transparent safety practices, they establish soft power and leadership.

The outcome of the Bores race could influence whether other countries move toward stricter or more permissive AI regulation. If the U.S. industry successfully defeats transparency requirements, it validates the political strategy of using spending to block regulation. Other countries' industries would try similar strategies. But if transparency requirements pass, it signals that democratic accountability can overcome industry spending, encouraging similar regulatory efforts elsewhere.

The Safety Question: What We Actually Know About AI Safety

Underneath all the political strategy and industry fighting is a genuine scientific and philosophical question: how safe are current AI systems, and what oversight mechanisms actually reduce risks?

This is important because the entire debate assumes that transparency requirements and incident reporting would actually improve safety. But if transparency doesn't materially affect safety outcomes, then the RAISE Act is just bureaucratic overhead that slows innovation without benefit. Conversely, if transparency and oversight significantly improve safety, then the compliance costs are justified.

What we actually know is incomplete. AI systems are doing some remarkable things—having nuanced conversations, generating creative content, solving complex problems. But they also exhibit concerning behaviors: hallucinating false information with confidence, reflecting biases in training data, being used for fraud and misinformation. The question is whether these risks are manageable with current practices or whether they require additional oversight.

Anthropic's research on AI safety suggests that transparency and rigorous testing can identify problems before they cause real-world harm. The company has published research on techniques for making AI systems more interpretable, more transparent about their reasoning, and more aligned with human values. If these techniques work, then the safety benefits are real.

But OpenAI and other companies argue that transparency requirements could slow the very research needed to solve safety problems. If companies spend time complying with disclosure requirements, they have less time for actual safety research. From this perspective, the best safety strategy is to move fast and iterate, learning from real-world usage rather than trying to anticipate problems ahead of time.

This is a genuine disagreement among experts, not just a political fight. There are safety researchers who believe that pre-deployment testing and transparency are essential. There are other safety researchers who believe that iterative development with real-world feedback is the most effective approach.

The empirical evidence from other industries is mixed. The pharmaceutical industry's pre-market testing and incident reporting system has clearly prevented massive harms. But the fast-moving software industry has thrived with minimal pre-deployment testing and learned from bugs in production. Different domains might require different safety approaches.

For AI specifically, we're early enough that we don't have strong empirical evidence about which approaches work best. This uncertainty is actually at the heart of the policy debate. Leading the Future argues that we should go with the fast-iteration approach because that's what worked for software. Anthropic argues that AI is powerful enough that we should use the pre-deployment testing approach that worked for pharma. Without more evidence, reasonable people can disagree.

Economic Consequences: Who Wins and Who Loses from AI Regulation

Beyond the abstract policy questions, the RAISE Act would have concrete economic consequences for different companies and stakeholders. Understanding who benefits and who gets harmed is essential to understanding why the political coalitions are forming the way they are.

For large, well-funded companies like OpenAI, Anthropic, and Perplexity, the transparency requirements aren't catastrophically expensive. These companies already conduct safety testing and maintain documentation of their systems. The marginal cost of filing reports with regulators is substantial but manageable. The real burden is more about time and friction than money.

For startups and smaller companies, the burden is potentially more significant. If you're a 20-person startup that built an AI tool, the cost of maintaining incident reporting and safety disclosures is higher per employee than for a 500-person company. This creates barriers to entry that favor incumbents. Leading the Future's argument that the RAISE Act would entrench big companies versus startups has real merit.
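The per-employee arithmetic behind that point can be sketched in a few lines of Python. This is only an illustration: the $400,000 annual compliance cost is a made-up figure, not an estimate from the RAISE Act or any company, and the sketch assumes the cost is entirely fixed regardless of company size.

```python
# Hypothetical figure for illustration only; not from the RAISE Act or any company.
ANNUAL_COMPLIANCE_COST = 400_000  # assumed fixed yearly compliance cost, in USD


def cost_per_employee(fixed_cost: float, headcount: int) -> float:
    """Spread a fixed compliance cost evenly across a company's headcount."""
    if headcount <= 0:
        raise ValueError("headcount must be positive")
    return fixed_cost / headcount


# Headcounts taken from the example in the text: 20 versus 500 employees.
startup = cost_per_employee(ANNUAL_COMPLIANCE_COST, 20)
larger = cost_per_employee(ANNUAL_COMPLIANCE_COST, 500)

print(f"20-person startup:  ${startup:,.0f} per employee")   # $20,000
print(f"500-person company: ${larger:,.0f} per employee")    # $800
print(f"Ratio: {startup / larger:.0f}x")                      # 25x
```

Under the fixed-cost assumption, the 25x gap is simply the headcount ratio (500 / 20), whatever dollar figure you plug in. In practice some compliance costs probably scale with company size, so the real gap would likely be smaller.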

For consulting firms and compliance services companies, the RAISE Act is a windfall. They would build businesses around helping AI companies comply with transparency requirements. This is why you sometimes see what looks like strange coalition-building in policy debates—companies that would profit from regulation often quietly support it, even if they're not publicly backing it.

For workers in AI companies, the consequences are ambiguous. If the RAISE Act slows innovation and reduces revenue growth, that could reduce hiring and wage growth. But if it improves public trust in AI and enables faster, broader adoption, it could increase demand. The net effect on workers is unclear.

For consumers and the public, the theoretical benefit is lower AI-related harms through better oversight. If the incident reporting requirement leads to faster identification and mitigation of AI misuse, consumers benefit. But if the transparency requirements slow down beneficial innovation, consumers lose access to useful capabilities. Again, the net effect depends on implementation details.

For New York State itself, the economic consequences are particularly interesting. New York has a growing AI ecosystem. If the RAISE Act makes it harder to do AI business in New York, companies might relocate to other states. But if the RAISE Act becomes a model that creates standards across the country, New York companies that embrace transparency could gain competitive advantage.

This is actually one of the fascinating aspects of the Bores race. New York is something of a regulatory leader on tech issues (antitrust, privacy, AI bias audits). If the RAISE Act passes and other states follow, New York sets a national standard. But if the RAISE Act fails and industry successfully blocks it, it signals that individual states can't effectively regulate AI: the burden of different state rules is too high, so companies will lobby each state separately. That would suggest that only federal-level regulation is feasible.

Transparency Versus Proprietary Concerns: The Real Tension

One of the genuine tensions in this debate that doesn't get enough attention is the conflict between transparency and intellectual property protection. Both are valuable, but they're partially in conflict. Understanding this tension is crucial to evaluating whether the RAISE Act strikes the right balance.

On one side, companies have legitimate interests in protecting intellectual property. The specific safety testing methodologies that OpenAI uses are proprietary and valuable. If those methodologies are disclosed to competitors, even in confidence to regulators, the companies lose competitive advantage. This is a real concern, not just corporate self-interest.

On the other side, regulators need enough information to actually understand whether companies are taking safety seriously. If companies can disclose their safety practices in such vague and general terms that regulators learn nothing useful, then the transparency requirement becomes meaningless. Regulators need specific information about testing protocols, incident reports, and system capabilities.

The RAISE Act tries to handle this by allowing companies to make in-confidence disclosures to regulators. The idea is that companies disclose detailed information to the state, the state uses that information to understand safety practices and evaluate compliance, but the detailed information isn't made public. Only aggregated, anonymized incident data becomes public.

This is a reasonable compromise in theory, but there are real concerns about how it would work in practice. Once information is disclosed to a government agency, it's potentially subject to freedom of information requests, subpoenas in litigation, or leaks. Companies can't really be confident that their proprietary information will stay protected.

Furthermore, regulators might not have the technical expertise to effectively evaluate the safety information companies provide. If a company tells a regulator that they conduct safety testing using methodology X, how does the regulator know if X is actually adequate? The regulator would need substantial AI expertise to evaluate whether a company's safety practices are actually sufficient.

Anthropic's response to this is that regulatory capacity can be built. States can hire AI safety experts, can require companies to justify their methodologies, can benchmark practices across companies. Over time, regulators can develop real expertise in evaluating company claims. But this requires investment and takes time.

Leading the Future's counter-argument is that regulators will never have the same expertise as companies building AI systems. The companies have hundreds or thousands of engineers working on safety. State regulators will have a handful of experts. In this asymmetry, companies will always be able to convince regulators that whatever they're doing is adequate, whether or not it actually is.

This is actually a real concern in tech regulation. The FTC has struggled with understanding complex tech issues because the agency's capacity is small relative to the companies it regulates. Building regulatory capacity that matches industry expertise is expensive and slow.

But the alternative, letting companies self-regulate entirely, has also shown problems. Financial self-regulation in the 2000s led to the financial crisis. Tech self-regulation has led to privacy violations, monopolistic behavior, and content moderation failures. Perfect regulatory capacity isn't necessary; you just need enough capacity that companies can't completely blow off the regulator.

What the Bores Race Tells Us About the Future of Tech Politics

Regardless of who wins the Bores race, the fight itself tells us something important about how tech politics is evolving in America. For the first time, we're seeing tech companies willing to spend enormous amounts of money to fight each other over regulatory policy. This represents a fundamental shift in how the industry approaches politics.

For the first two decades of the internet, tech companies had largely aligned interests in opposing regulation. Even when competitors fought each other, they united on regulatory issues. But AI has fractured that unity. Now companies are explicitly fighting over what regulations should be.

This fragmentation reflects genuine disagreements about the future of AI, but it also reflects different competitive positions. Companies that are ahead in capability want less oversight so they can capitalize on their lead. Companies that are focused on building trust and market share through safety want more oversight to differentiate themselves. As long as companies have different competitive interests, they'll disagree on regulation.

The PAC spending is also notable because it shows that tech companies have learned to use political power effectively. They're not just lobbying quietly behind the scenes. They're building public campaigns, making ads, mobilizing voters. They're treating technology policy like a regular political issue where campaign spending and messaging matter.

This has implications for future policy debates. If companies can use PAC spending to defeat pro-regulation candidates, then regulation becomes a political question rather than a technical question. Legislators will weigh not just the merits of regulation but the political consequences of opposing industry. That shifts power toward the companies that can spend the most money.

But it also cuts both ways. If pro-regulation candidates can win despite industry opposition, it shows that industry spending isn't determinative. It shows that voters can be persuaded to prioritize regulation even when facing massive industry spending. That suggests that the political future of tech regulation depends on how effectively different sides can make their case to voters.

The outcome of the Bores race will likely influence how other companies approach political spending on tech issues. If Bores wins, you can expect to see more pro-regulation super PACs funded by companies like Anthropic. If Bores loses, other companies will become less likely to oppose industry positions, knowing that industry can outspend them politically.

Pathways Forward: How This Could Actually Get Resolved

The current situation—with major AI companies funding opposing super PACs over a single state regulation—is obviously not sustainable long-term. Something has to give. Either the RAISE Act becomes law and other states follow, or it gets defeated and industry maintains veto power over transparency requirements. Or something in between happens.

One possibility is that the RAISE Act gets modified to address some of the implementation concerns. Maybe the in-confidence disclosure process gets more robust protections. Maybe the incident reporting requirement gets more narrowly defined. Maybe the timeline for compliance gets extended. With modifications, the bill might become acceptable to more companies while still achieving the core transparency goals.

Another possibility is that industry and regulators work together to develop voluntary standards that achieve similar transparency goals without the force of law. Companies voluntarily agree to report certain incidents, voluntarily disclose certain safety practices, voluntarily participate in an industry registry. This wouldn't satisfy safety advocates, but it might be better than nothing.

A third possibility is that federal regulation emerges and supersedes state-level efforts. Congress develops national AI safety and transparency standards. Once that happens, states wouldn't need their own separate requirements. This might actually be preferable to industry because uniform federal standards are easier to comply with than a patchwork of state regulations.

A fourth possibility is that the incident reporting requirement becomes standard industry practice without regulatory mandate. If one company starts reporting AI misuse incidents and it helps them build trust and capture market share, other companies follow. Transparency becomes competitive advantage rather than regulatory burden.

The most likely outcome is some combination of these pathways. The RAISE Act or something like it probably passes in some form, gets modified to address implementation concerns, and becomes a model for other states. The resulting patchwork of state regulations creates pressure for federal harmonization. Eventually, companies adopt transparency practices that become industry standard, partly because regulations require it and partly because it becomes competitive advantage.

This is the pattern we've seen with other tech regulations. Privacy regulations started at the state level (California), got modified, spread to other states, and created ongoing pressure for federal standards. Data security started as voluntary best practices, became industry standard, and eventually got embedded in regulations. The same thing is likely to happen with AI transparency.

The question isn't really whether AI companies will eventually operate under transparency requirements. They will. The question is whether that transition happens through industry leadership and voluntary adoption, or through political battles and regulation. The Bores race is one battle in that larger war.

The Investment Perspective: Why VCs Are Divided on AI Regulation

One aspect of this fight that doesn't get enough attention is the venture capital dimension. Andreessen Horowitz and other leading VCs are funding opposition to the RAISE Act, while other investors might be more supportive of transparency requirements. This reflects a genuine divide in how different investors think about AI's future.

Andreessen Horowitz has made enormous bets on AI companies that are focused on capability and speed. The firm has invested in companies building foundation models, image generation tools, search engines, and other cutting-edge AI applications. For these companies, transparency requirements and incident reporting create friction. So a16z opposes regulations that would slow these companies down.

But other investors are betting on companies that are focused on AI safety, responsible development, and compliance. Anthropic is one of these companies. For investors backing Anthropic, transparency requirements are a feature, not a bug. They help Anthropic differentiate itself as the "responsible" AI company and build a moat around that positioning.

This reflects a deeper divide in investment thesis about AI's future. Some VCs believe AI will be safe and beneficial as long as it develops quickly. Slow regulation risks giving leadership to other countries and missing massive opportunities. This group funds fast-moving companies and opposes regulation.

Other VCs believe AI will be safe and beneficial only if it develops responsibly. They think the risks of uncontrolled AI development are real and that transparency and oversight reduce those risks. This group funds companies with safety focus and supports smart regulation.

Over time, these different investment theses will be tested by reality. If fast AI development proves safe, the anti-regulation investors will have been right. If transparency and oversight prove essential to managing AI risks, the pro-regulation investors will have been right. We won't know for years which side had it right.

What's interesting is that this split within the VC community mirrors a split within the AI research community. Some AI safety researchers believe the primary risk is that AI development is too fast and uncontrolled. Other AI researchers believe the primary risk is that regulation will slow beneficial development. These genuinely different views drive investment decisions and political positions.

The outcome of the Bores race could influence future VC positions on AI regulation. If the pro-regulation side wins, it validates the thesis that transparency requirements are politically viable and potentially valuable for building trust. That could lead more VCs to fund companies with safety focus. If the anti-regulation side wins, it validates the thesis that industry spending can defeat regulatory threats. That could lead VCs to fund more aggressive, regulation-resistant companies.

Lessons From Other Industry Battles: What History Tells Us

The AI regulation battle isn't the first time a new technology has faced similar fights. There are lessons from previous battles over pharmaceutical regulation, auto safety, airline safety, and other domains that give us some perspective on what might happen with AI.

In the pharmaceutical industry, initially there was minimal regulation. Companies could bring drugs to market with little oversight. Then thalidomide happened, a drug that caused severe birth defects. The public outcry was enormous. In 1962, Congress strengthened the FDA's authority to require pre-market testing. The industry resisted, arguing that safety requirements would stifle innovation. But over time, the industry adapted. Companies built safety testing into their development process. Pre-market testing became standard practice. And pharmaceutical innovation has continued robustly despite the regulatory burden.

The key lesson from pharma is that regulation doesn't actually prevent innovation. It changes how innovation happens. Companies have to invest in safety testing rather than just capability development, but innovation continues.

In the automotive industry, initially there were no safety regulations. Cars were engineered for performance, not safety. Then Ralph Nader published "Unsafe at Any Speed," documenting safety problems. The government mandated safety features like seatbelts, crumple zones, and airbags. The industry predicted that these requirements would kill the auto industry. They were wrong. Safety became standard practice and innovation continued.

The key lesson from autos is that once a regulation becomes industry-standard, companies compete on how to comply efficiently rather than opposing the regulation itself. Once every car had seatbelts, companies stopped complaining about seatbelts and started competing on seatbelt quality and design.

In aviation, the industry has extensive safety requirements: regular inspections, incident reporting, crew training standards. These requirements make aviation very safe—it's statistically the safest mode of transportation. Some people claim the industry opposed these requirements initially, but actually the industry largely supported them because safety incidents were expensive. Regulation helped reduce costs by preventing incidents.

The key lesson from aviation is that regulation can actually improve industry outcomes by addressing collective action problems. No individual airline wants to have safety incidents, but if some airlines can save money by cutting safety corners, the market pressure forces all airlines to cut corners too. Regulation levels the playing field and allows all companies to invest in safety.

For AI, similar dynamics might play out. If transparency becomes standard practice, all companies will build it into their development. Innovation will continue, but it will be innovation within a framework of safety and oversight.

The history also suggests that fighting regulation is a losing long-term strategy. Industries that resist regulation eventually face stricter regulation after incidents prove that voluntary compliance doesn't work. Industries that embrace smart regulation tend to face lighter regulation and more public trust. From this perspective, Anthropic's strategy of backing transparency requirements might be smarter long-term than Leading the Future's strategy of fighting regulation.

Looking Forward: What Happens After the Election

The immediate question is who wins the Bores race. But the more important question is what happens next. Regardless of the outcome, AI regulation debates will continue in New York and other states. Here's what we might expect.

If Bores wins, expect to see more pro-regulation candidates running on AI safety platforms. The victory validates the strategy and emboldens other legislators. You'll see RAISE Act-like bills introduced in other states. You'll see more venture capital backing pro-regulation candidates. The political calculus shifts toward regulation.

If Bores loses, expect the opposite. Pro-regulation candidates will become more cautious. The industry will feel emboldened to oppose other transparency requirements. You'll see less venture capital backing pro-regulation candidates. But you'll also likely see increased pressure for federal regulation, because state-level defeats create urgency around federal action.

Either way, expect the fight to intensify. As AI becomes more central to the economy and society, regulation becomes inevitable. The question is what form that regulation takes. Will it be built in collaboration with the industry, or through political battles? Will it be light-touch transparency requirements, or heavy-handed approval processes? Will it happen at the state, federal, or international level?

The companies involved are also thinking long-term. Leading the Future's $100+ million war chest isn't just about defeating Bores. It's about establishing that the pro-innovation coalition has the resources and will to defeat pro-regulation candidates. It's about deterring future candidates from taking pro-regulation positions. The battle is both immediate and about setting precedents.

The outcome will reverberate for years. It will influence how venture capitalists fund AI companies. It will influence which positions politicians take on AI. It will influence how international regulators approach AI policy. A single New York congressional race has outsized implications.

The Bigger Picture: What This Means for AI's Future

At the deepest level, the Bores race and the broader fight over AI transparency is about what kind of AI future we want to build. Is AI going to be developed primarily by companies chasing capabilities and market share? Or will it be developed by companies that are also deeply invested in safety and responsible deployment?

Both models can coexist. Different companies will take different approaches. But the question is which approach becomes the norm. If transparency and safety become competitive requirements rather than differentiators, then the entire industry moves toward more responsible practices. If transparency remains optional and competitive advantage goes to companies moving fastest, then the industry remains primarily speed-focused.

The RAISE Act is a modest attempt to shift the norm. It doesn't ban capability development or restrict innovation. It just requires that companies be transparent about their practices and report incidents. If even this modest requirement causes massive industry opposition, it suggests the industry isn't yet ready to embrace transparency as normal.

Anthropic's willingness to fund the opposite position is significant because it suggests that at least one major AI company thinks transparency is important enough to fight for politically. If Anthropic is right that transparency is good for long-term innovation and adoption, then other companies will eventually come to the same realization. The question is whether that happens through voluntary adoption or through political fights.

The outcome of the Bores race will influence that timeline. A victory for transparency advocates accelerates the transition. A victory for industry opponents delays it. Either way, the underlying pressure for more oversight of powerful AI systems isn't going away. The only question is how that oversight gets implemented.

FAQ

What is the RAISE Act?

The RAISE Act is a New York state bill that requires major AI developers to disclose their safety testing protocols and report serious misuse of their systems to state authorities. The bill also establishes a public registry of AI safety incidents. It's designed to increase transparency and oversight of AI development without restricting capability development or innovation.

Why are AI companies fighting over the RAISE Act?

AI companies have fundamentally different views on what responsible AI development looks like. OpenAI and its backers believe that rapid capability development with minimal oversight is the best path forward. Anthropic and its supporters believe that transparency and safety practices are essential for building public trust and responsible long-term development. The political fight over the RAISE Act is a proxy battle for these different visions.

How much money is being spent on the Bores race?

Leading the Future has spent over

What happens if Bores loses the election?

If Bores loses, it sends a strong signal that industry spending can defeat pro-regulation candidates. This would likely discourage other legislators from introducing similar AI transparency bills. It would validate the anti-regulation coalition's political strategy. However, a Bores loss might also increase pressure for federal-level AI regulation, because it demonstrates that state-level regulation can be blocked through industry spending.

What happens if Bores wins the election?

If Bores wins, it validates the strategy of building a pro-regulation coalition and suggests that voters care about AI safety and transparency. It would likely encourage other states to introduce RAISE Act-like bills. It would demonstrate that industry spending, while powerful, isn't determinative. It might also increase investment in pro-regulation candidates and policies.

How does the RAISE Act compare to regulations in other countries?

The EU's AI Act is more comprehensive than the RAISE Act, requiring impact assessments and detailed documentation of AI systems. The RAISE Act is more focused on transparency and incident reporting. Most countries are somewhere in between, requiring some oversight without being as restrictive as the EU. The RAISE Act represents a relatively moderate approach to AI regulation compared to international standards.

Why is Anthropic backing a $450,000 spending campaign for one congressional race?

Anthropic is backing the campaign because it aligns with the company's core positioning as a safety-focused AI developer. A Bores victory would validate the approach of prioritizing safety and transparency. It would establish that responsible companies can win political battles. It would also build Anthropic's brand as the company that actually cares about responsible AI, which is a valuable competitive differentiator.

Could the RAISE Act actually hurt AI innovation?

The transparency requirements and incident reporting could add some compliance costs and friction to development. However, similar requirements in other industries haven't prevented innovation—they've just made innovation happen within a regulatory framework. Whether the costs outweigh the benefits depends on whether transparency and oversight actually reduce harms and build public trust in AI systems.

What would happen if AI companies refused to comply with the RAISE Act?

If the RAISE Act passes and companies refuse to comply, the state could pursue enforcement through fines, cease-and-desist orders, or bans on operating in New York. However, practical enforcement would be challenging because many AI systems are deployed online and aren't clearly limited to specific states. Enforcement would likely focus on companies with a clear New York presence or headquarters.

How does this relate to federal AI regulation?

The Bores race is part of a broader pattern where states are moving faster than the federal government on AI regulation. The pressure for federal-level standards is growing, partly because companies want uniform rules rather than a patchwork of state regulations. The outcome of state-level battles like the Bores race influences whether federal regulation becomes necessary to simplify compliance.

The fight between Anthropic-backed supporters and OpenAI-aligned opponents over the RAISE Act represents something unprecedented in tech politics: major companies in the same industry openly fighting each other over regulatory policy. It's a sign that the AI industry's consensus about regulation has fractured, and that fundamental questions about how AI should be governed are no longer settled.

The stakes are enormous, not just for New York but for how AI governance develops globally. The outcome will influence investment decisions, regulatory approaches, and how companies prioritize speed versus safety. In many ways, this single congressional race is a referendum on what kind of AI future we want to build—one defined by rapid capability development, or one defined by responsible oversight.

What makes this particularly important is that both sides have legitimate points. There is a real risk that transparency requirements slow innovation. There is also a real risk in uncontrolled development without public oversight. The question isn't whether one side is entirely right and the other entirely wrong. It's about how to balance innovation with responsibility, speed with safety, corporate autonomy with public accountability.

The Bores race is the first major political battle over these questions. It won't be the last. As AI becomes more central to society, these battles will only intensify. The winner of this race will have outsized influence on how those future battles play out. That's why companies are willing to spend millions on a single congressional race. The precedent being set is far more valuable than the immediate outcome.

Key Takeaways

- Anthropic's $20M in super PAC funding, some of it spent backing Alex Bores, marks the first time a major AI company has openly funded opposition to industry-backed political campaigns over regulatory policy

- The RAISE Act requires transparency in AI safety protocols and incident reporting—a modest requirement that the broader industry views as an existential threat to innovation speed

- Leading the Future's $100M+ coalition (Andreessen Horowitz, OpenAI President Greg Brockman, Perplexity, Palantir co-founder Joe Lonsdale) sits on one side of a fracturing AI industry consensus about the proper balance between innovation and oversight

- The Bores race outcome will set precedent for whether industry political spending can defeat pro-regulation candidates, influencing AI policy strategies in California, Massachusetts, and other states

- Transparency requirements don't prevent innovation in other industries (pharma, aviation, autos)—they change how innovation happens by forcing companies to account for safety alongside capability
