What AI Leaders Predict for 2026: Inside the Minds of ChatGPT, Gemini, and Claude

Last December, I sat down with three of the most sophisticated AI assistants available today and asked them a simple question: what does 2026 look like for AI?

The answers were revealing. Not because they're always right, obviously—AI systems hallucinate and make confident predictions about uncertain futures like everyone else. But because they expose what these systems have learned from their training data, what the researchers building them care about, and where the industry consensus is actually heading.

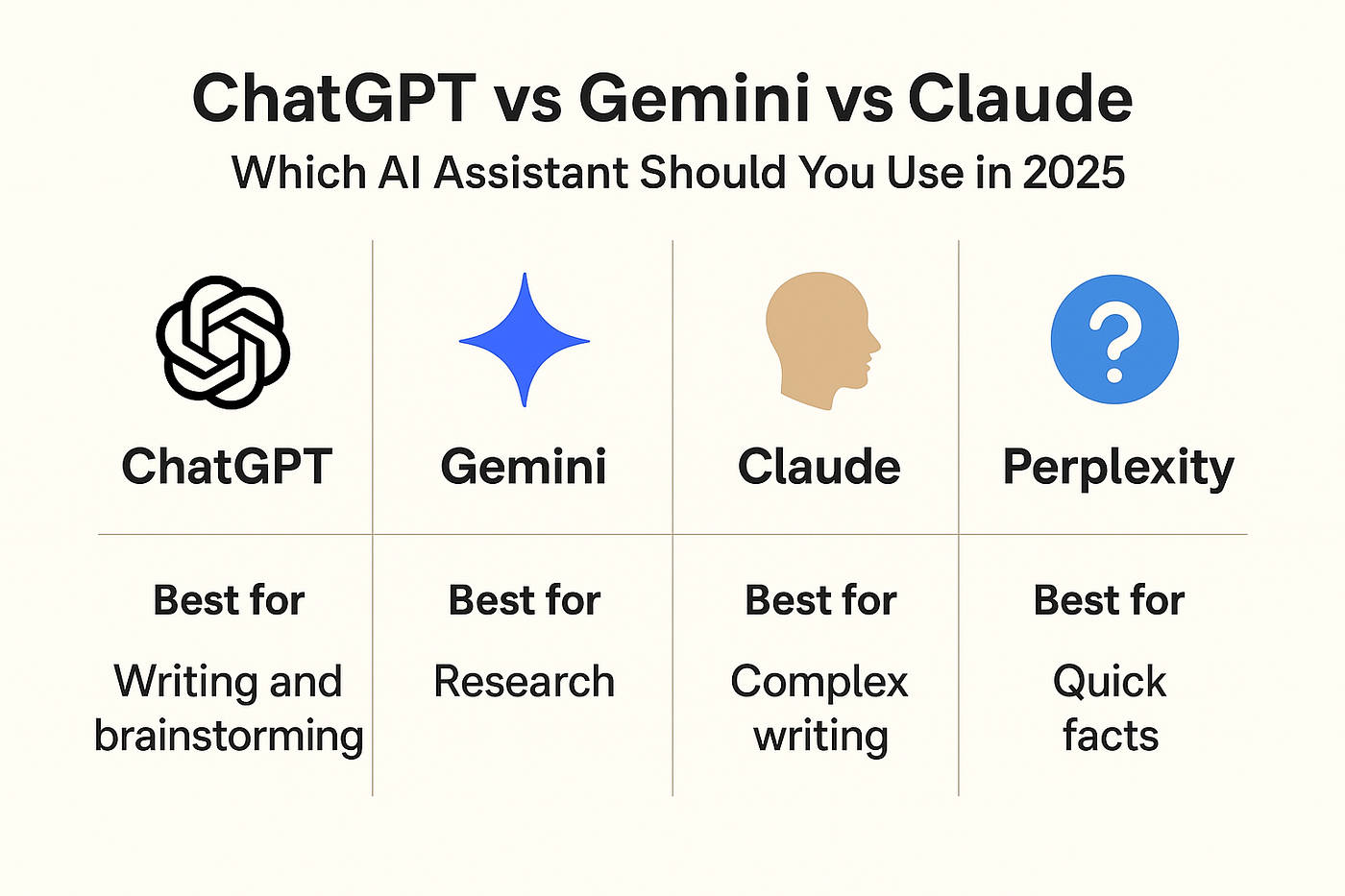

ChatGPT, Gemini, and Claude don't always agree. On some topics, they're remarkably aligned. On others, their differences reveal fundamental disagreements about what matters most in AI development. That's the interesting part.

This isn't a piece about whether to trust AI predictions. It's about understanding what these systems think is important, where they see momentum, and what problems the people building them believe need solving in the next 12 months.

Why This Matters More Than You Think

Here's something most people miss: when you ask an AI system about future trends, you're not just getting predictions. You're getting insight into what its training data emphasized, what its creators care about, and what conversations have dominated AI research and development.

OpenAI, Anthropic, and Google have slightly different priorities. Those priorities show up in how their models respond to questions about the future. That's valuable information on its own.

The Setup

I asked each system the same set of questions:

- What's the biggest breakthrough you expect in AI in 2026?

- What problem will the industry finally solve?

- Where will most AI innovation happen?

- What misconceptions do people have about AI's near future?

- How will AI change work, specifically?

- What concerns you most about AI development?

I didn't cherry-pick responses. I'm presenting what they said, organized by theme, with context about what each answer reveals.

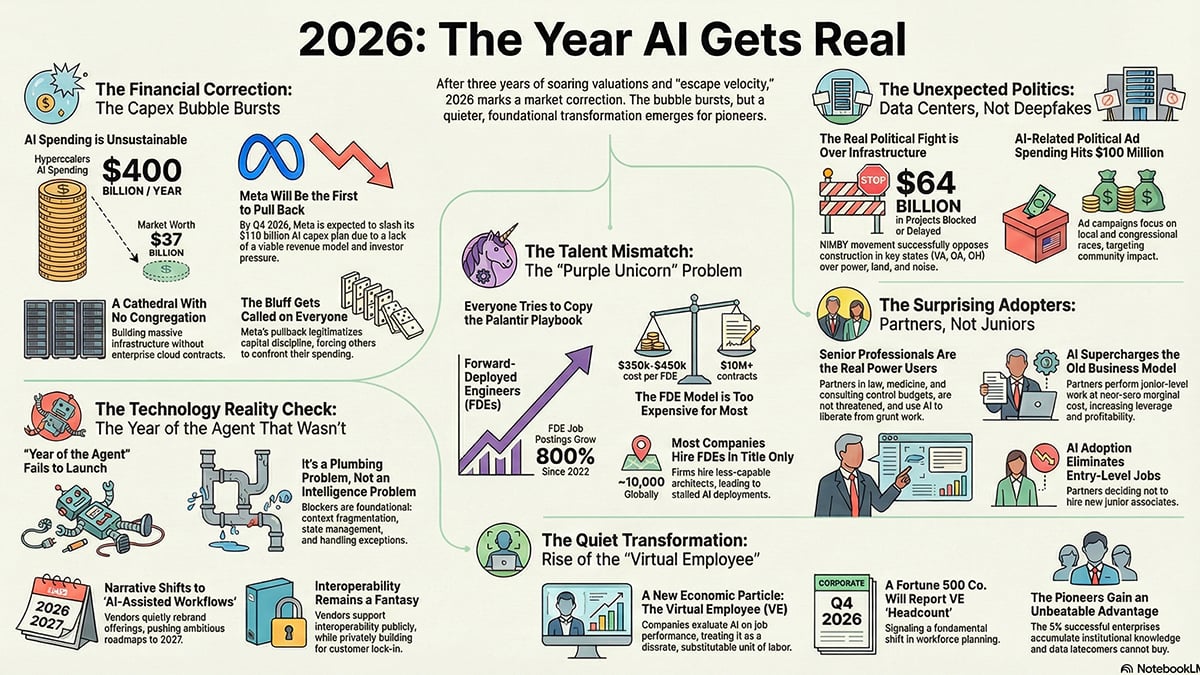

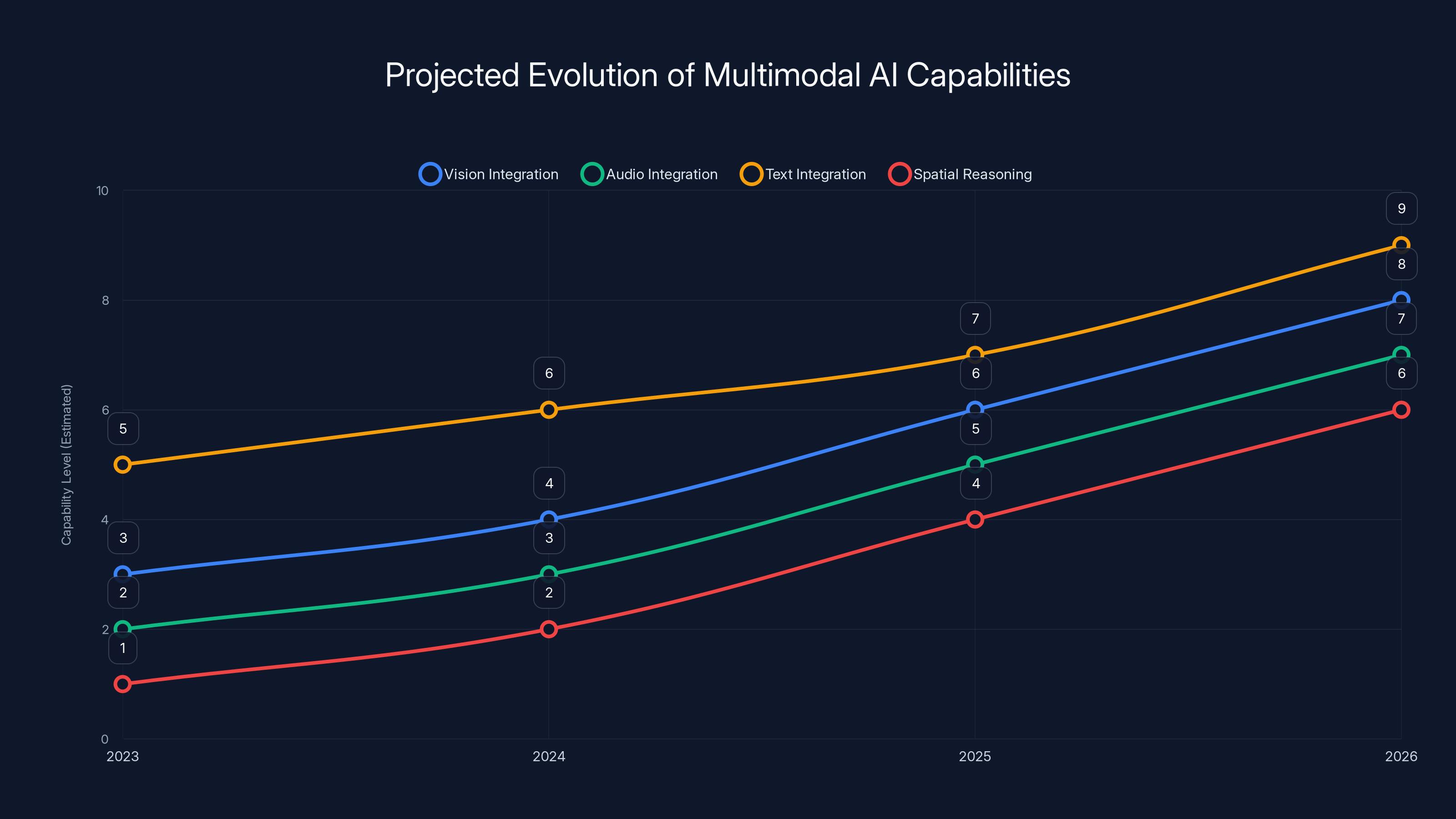

The Consensus: Multimodal AI Is Already Here, But It's About to Get Much Smarter

All three systems agree on one thing: 2026 won't be the year multimodal AI arrives. It's already here. The year 2026 is when it stops being a party trick and becomes genuinely useful.

Right now, when you upload an image to ChatGPT, it can describe what it sees, but the understanding is surface-level. The model recognizes objects, reads text, and identifies patterns. It doesn't reason about what it's seeing the way humans do.

ChatGPT predicted that 2026 would bring "richer integration of vision, audio, and text." That's the polite version. What it means: your AI assistant will understand a blurry screenshot, an audio clip, a video, and written instructions all at once, and synthesize them into a coherent response without asking you to clarify.

Gemini went further. Its response emphasized "spatial reasoning"—the ability to understand three-dimensional objects and how they relate to each other. If you show it a room and ask where to put furniture, it won't just describe the space. It'll understand depth, proportion, and constraints.

Claude's take was more conservative but more specific: it predicted that multimodal models would finally "demonstrate reliable understanding across modalities" without degradation in quality. Translation: models would become as good at understanding images as they are at understanding text.

This matters because it changes what becomes possible. Right now, if you want an AI to analyze a photo of a damaged part and write a repair manual, you're combining multiple tools. In 2026, one system should handle the whole workflow.

What This Actually Means for Products

For developers and product teams, this prediction translates into concrete implications.

First, the constraint of "text in, text out" or "image in, description out" disappears. Your AI can work with mixed inputs and mixed outputs. You can build workflows that previously required human intervention.

Second, the quality floor rises. Models that are mediocre at image understanding but good at text become obsolete. If every competitor's model is equally good at vision and language, you need to compete on something else—speed, cost, reliability, or specificity to your domain.

Third, context windows explode. If a model is truly multimodal, it needs to hold video, audio, images, and text in working memory at the same time. The models that do this best in 2026 will likely have massive context windows, perhaps 200K to 1M tokens. That's enough to load an entire conversation, a PDF, a screenshot, and a short video all at once.
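To make the context-window point concrete, here is a rough budgeting sketch in Python. Every per-item token count in it is a hypothetical placeholder; real providers tokenize images, audio, and video differently, so you would substitute numbers measured against the model you actually use.

```python
# Hypothetical per-item token costs; real values vary widely by provider and settings.
ESTIMATED_TOKENS = {
    "conversation": 20_000,   # a long chat history
    "pdf": 60_000,            # a long document
    "screenshot": 1_500,      # one image
    "video_minute": 15_000,   # one minute of video (frames plus audio)
}

def fits_in_context(items: dict[str, int], window: int) -> tuple[bool, int]:
    """Return (fits, total_tokens) for a mix of inputs, given a count per item type."""
    total = sum(ESTIMATED_TOKENS[kind] * count for kind, count in items.items())
    return total <= window, total

request = {"conversation": 1, "pdf": 1, "screenshot": 1, "video_minute": 10}
for window in (200_000, 1_000_000):
    fits, total = fits_in_context(request, window)
    print(f"{window:>9,} window: need {total:,} tokens -> {'fits' if fits else 'too large'}")
```

With these made-up numbers, a conversation, a PDF, a screenshot, and ten minutes of video come to about 231,500 tokens: too large for a 200K window, comfortable in a 1M one.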

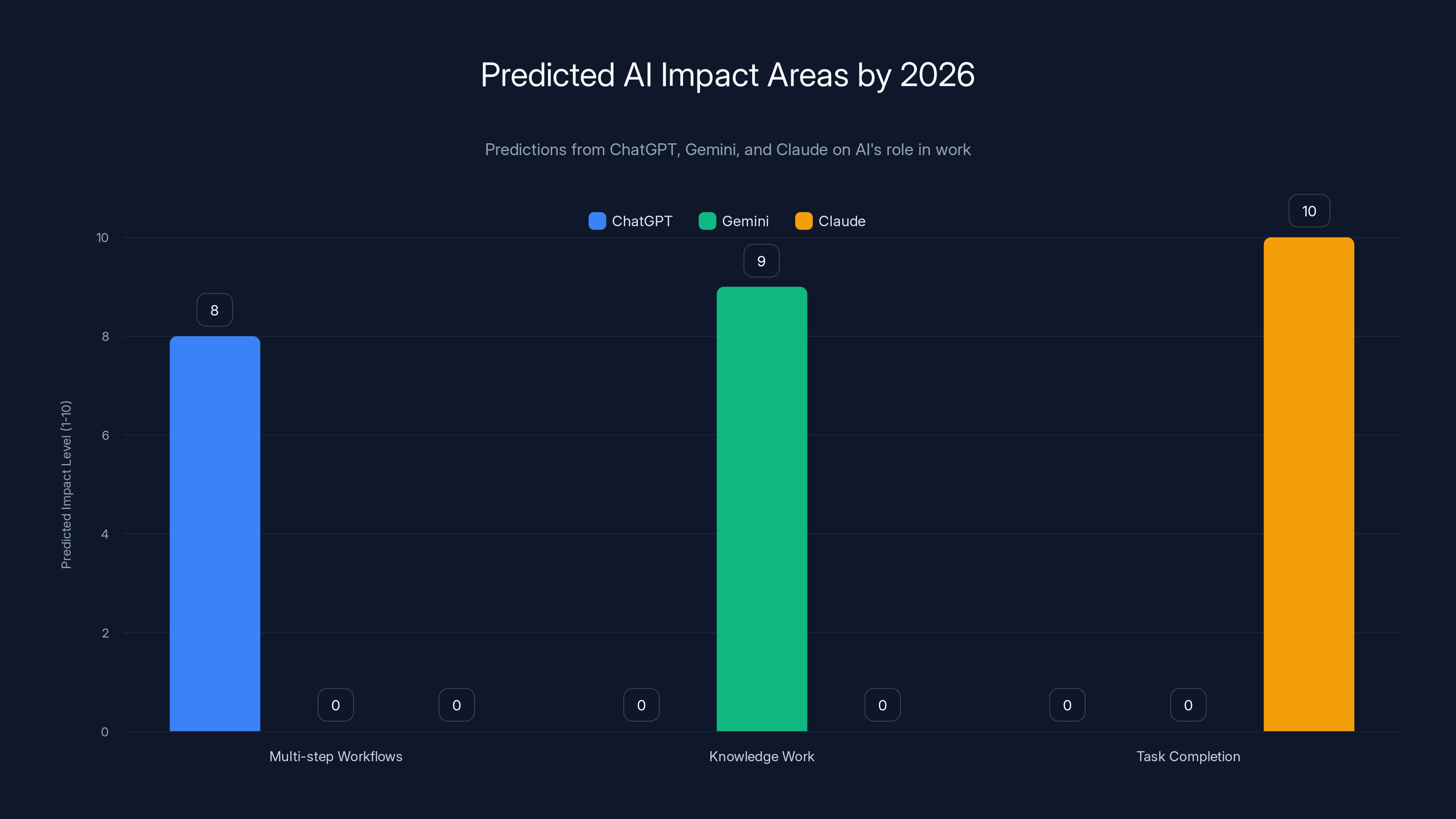

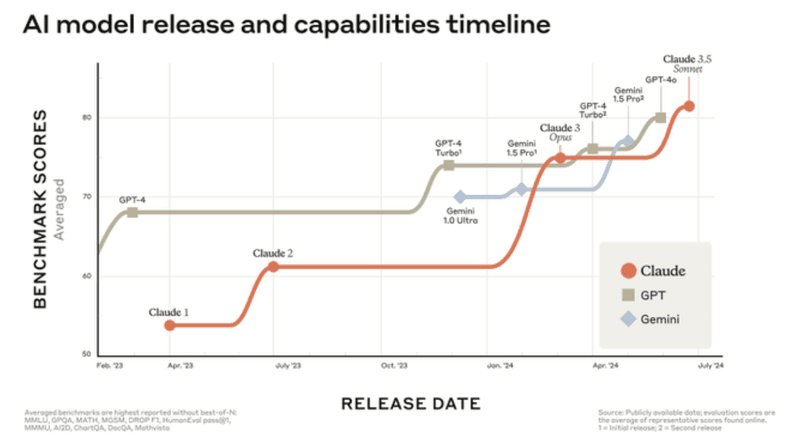

By 2026, AI is expected to significantly impact multi-step workflows, knowledge work, and task completion, with each system focusing on different areas. Estimated data.

The Reasoning Gap: Where All Three Systems Get Philosophical

This is where the predictions get interesting, because all three systems identified the same problem, but they described it differently.

The Problem: Current AI is good at retrieval and pattern matching, but it struggles with novel reasoning. Ask ChatGPT to solve a math problem it hasn't seen in training, and it often fails. Ask it to reason through a complex logical puzzle, and it gets confused.

ChatGPT's Take: "Chain-of-thought reasoning will become more sophisticated." What it actually meant, based on follow-up conversation, is that the model will learn to break problems down into smaller steps more reliably and will know when it has reached the limits of its reasoning.

Gemini's Take: "Reasoning as a fundamental capability, not an add-on." Google's system predicted that by 2026, the ability to reason would be baked into the base model architecture, not bolted on top after training. This is a more technical prediction and suggests Google believes reasoning requires structural changes to how models are built, not just better training data.

Claude's Take: "Mechanistic interpretability will unlock better reasoning." Anthropic's model predicted that understanding why a model makes certain predictions will help researchers build better reasoning systems. This is the most research-focused prediction and suggests Anthropic sees the path forward as understanding the internal mechanics of existing models.

What does this disagreement reveal? It shows three different bets on how to solve the same problem.

OpenAI believes better prompting strategies and training techniques will help models reason more reliably. Google believes the architecture itself needs to change. Anthropic believes understanding what's happening inside the model is the key.

Historically, when there's this kind of divergence among leading labs, the answer usually involves all three approaches. You'll likely see papers in 2026 addressing reasoning through new training methods, new architectures, and better interpretability techniques.

Why Reasoning Matters in 2026

Right now, AI is useful for writing, summarization, code generation, and pattern recognition. But it hits a wall with problems that require genuine reasoning—debugging complex logic, solving novel physics problems, or planning multi-step projects with constraints.

Breakthroughs in reasoning change what's possible:

In coding: AI that can reason through your architecture will suggest refactors, not just write functions. It will understand the implications of design choices and warn you about potential issues.

In science: Researchers will use AI to explore hypothesis spaces and reason through contradictory evidence, not just summarize papers.

In business: AI can move from "here's a forecast" to "here's a forecast, and here's why you should be concerned about this assumption."

The models that crack this problem in 2026 won't just be better at hard tasks. They'll be fundamentally more reliable, because they can explain their reasoning and you can check if it's sound.

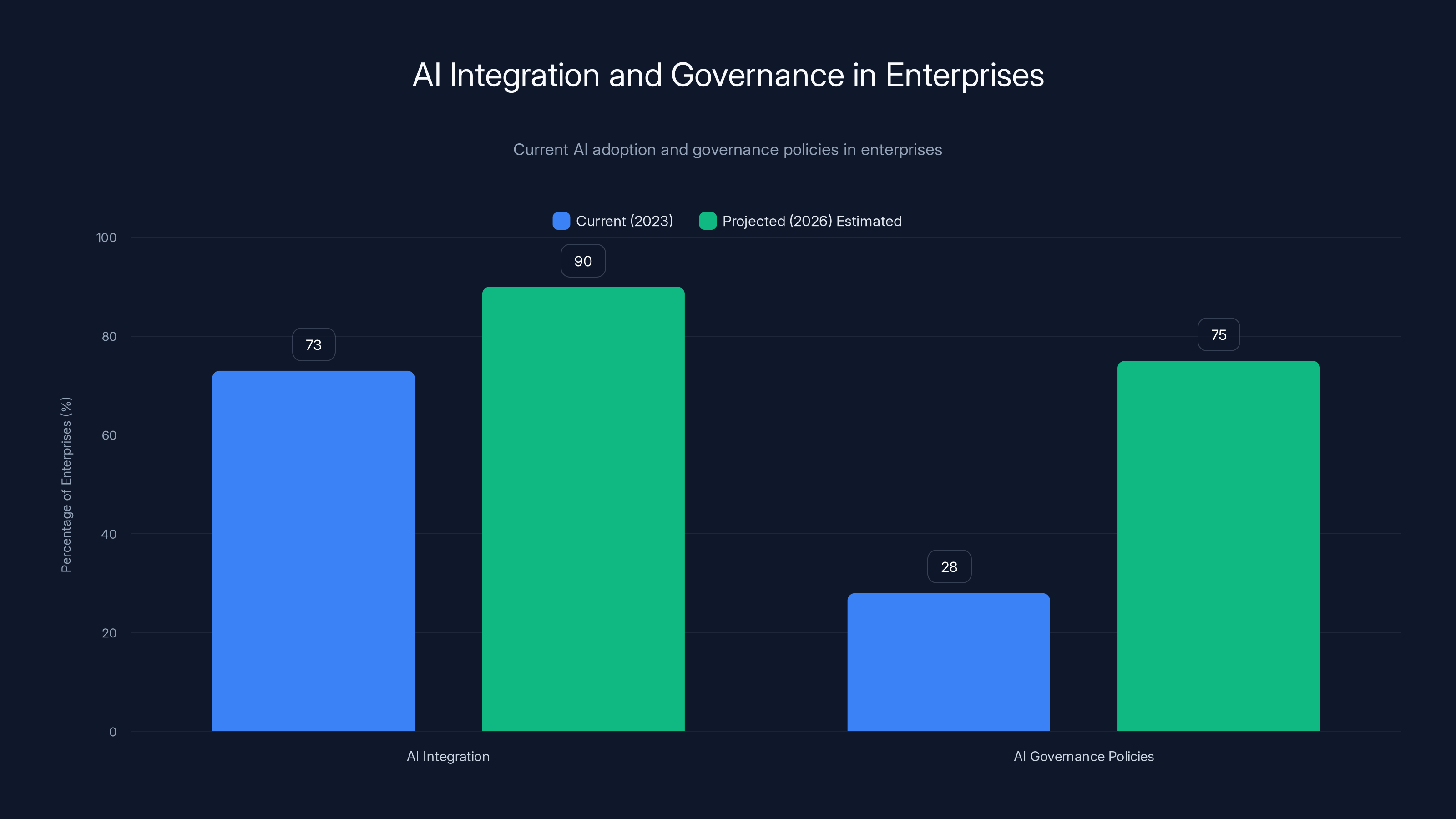

Currently, 73% of enterprises integrate AI, but only 28% have governance policies. By 2026, AI governance is expected to rise significantly, closing the gap with AI integration. Estimated data.

Automation Gets Specific (Finally)

When I asked about where AI would have the biggest impact on work, all three systems moved past the usual "AI will do mundane tasks" pablum and got specific.

ChatGPT predicted that 2026 would bring "AI agents that can handle multi-step workflows without constant human intervention." The key words there are "without constant." Not zero human intervention; the system is still realistic about that. But you won't need to check in after every step.

What does that look like? Instead of AI writing an email and you reviewing it, the AI writes the email, checks it against your templates, reviews it against your tone preferences, and sends it if it passes thresholds you've set. You review it only if something seems off.
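The "send only if it passes your thresholds" pattern can be sketched as a simple gate. This is a minimal illustration, assuming you already have some way to score a draft against your templates and tone preferences; the `DraftScores` fields and the threshold values are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class DraftScores:
    template_match: float  # 0..1 from a scorer you supply (hypothetical)
    tone_match: float      # 0..1 from a scorer you supply (hypothetical)

# Thresholds you set; these values are illustrative only.
THRESHOLDS = {"template_match": 0.80, "tone_match": 0.75}

def decide(scores: DraftScores) -> str:
    """Auto-send only if every score clears its threshold; otherwise flag for review."""
    failing = [name for name, minimum in THRESHOLDS.items()
               if getattr(scores, name) < minimum]
    if not failing:
        return "send"
    return "review: " + ", ".join(failing)

print(decide(DraftScores(template_match=0.92, tone_match=0.81)))
print(decide(DraftScores(template_match=0.92, tone_match=0.60)))
```

The human only sees the drafts that come back flagged, along with the names of the checks they failed.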

Gemini went narrower: it predicted major advances in "agentic AI for knowledge work." Knowledge work is the category—research, analysis, writing, planning. The prediction is that by 2026, AI will handle not just the obvious parts (writing) but the connective tissue (finding sources, verifying claims, organizing findings, formatting output).

Claude was most specific: "Task completion for professional workflows." Its prediction included specific examples—scheduling meetings, managing projects, coordinating across tools. The emphasis was on completion, not assistance. The AI doesn't suggest; it executes.

The Practical Implication: Workflow Integration

Right now, AI tools are islands. ChatGPT is separate from your email, which is separate from your project management tool. In 2026, these boundaries blur.

You won't ask Claude to write an email and then copy-paste it into Gmail. Instead, you'll tell your AI agent: "Draft a response to this feedback, send it, and add the conversation to the project." The agent handles the whole flow.

This requires a few things to align:

- Better API integration. Every tool you use needs to be accessible to AI agents.

- Permission systems that actually work. The AI needs to know what it's allowed to do without you reviewing every action.

- Fallback mechanisms. When the AI is unsure, it should ask you, not guess.

- Audit trails. You need to know exactly what the AI did and why.
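Here is a minimal sketch of how permissions, fallback, and audit trails might fit together, assuming a hypothetical agent that proposes named actions along with a confidence score. The action names, the policy sets, and the 0.7 confidence cutoff are all illustrative and not taken from any real agent framework.

```python
import json
from datetime import datetime, timezone

# Hypothetical policy: actions the agent may take alone vs. ones that need a human.
ALLOWED_WITHOUT_REVIEW = {"draft_email", "add_to_project"}
REQUIRES_APPROVAL = {"send_email"}

audit_log: list[dict] = []

def record(action: str, outcome: str, reason: str) -> None:
    """Audit trail: append what the agent did (or declined to do) and why."""
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "action": action,
        "outcome": outcome,
        "reason": reason,
    })

def attempt(action: str, confidence: float, min_confidence: float = 0.7) -> str:
    """Apply the permission policy, falling back to the user when unsure."""
    if action not in ALLOWED_WITHOUT_REVIEW | REQUIRES_APPROVAL:
        record(action, "blocked", "action not covered by policy")
        return "blocked"
    if action in REQUIRES_APPROVAL:
        record(action, "asked_user", "action requires approval")
        return "asked_user"
    if confidence < min_confidence:
        # Fallback: ask instead of guessing.
        record(action, "asked_user", f"low confidence ({confidence:.2f})")
        return "asked_user"
    record(action, "executed", f"allowed, confidence {confidence:.2f}")
    return "executed"

steps = [("draft_email", 0.9), ("send_email", 0.95),
         ("add_to_project", 0.4), ("delete_thread", 0.99)]
for action, confidence in steps:
    attempt(action, confidence)

for entry in audit_log:
    print(json.dumps({k: entry[k] for k in ("action", "outcome", "reason")}))
```

In this run, drafting executes, sending waits for approval, a low-confidence step falls back to asking you, and an action outside the policy is blocked. Every outcome lands in the log with its reason.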

Companies working in this space, such as Zapier, Make, and newer entrants building AI workflow layers, are building the infrastructure for this. In 2026, these tools become essential, not nice-to-have.

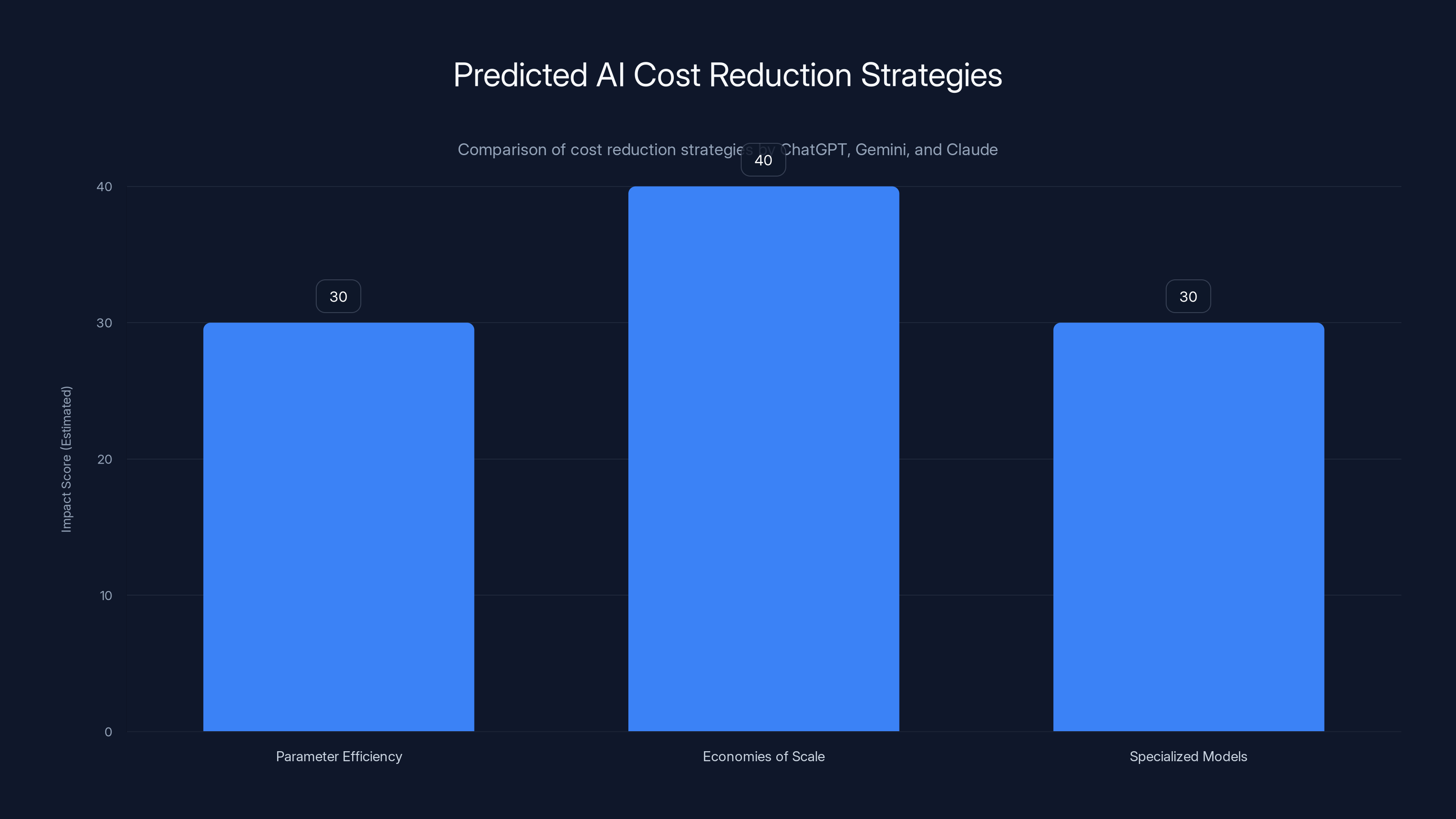

The Cost Question: Where Predictions Diverged Most

One question revealed the sharpest disagreement: what about cost?

Right now, running inference on GPT-4 is expensive. Running it at scale is very expensive. This limits what's viable as a product.

ChatGPT's prediction: "More efficient models will make AI cheaper to run." This is diplomatic. What OpenAI is signaling here is that it's betting on efficiency: doing more with fewer parameters, training more efficiently, and optimizing inference. Raw parameter count matters less; performance per token matters more.

Gemini's prediction: "Scale will drive cost down." Google was bullish that simply doing more inference at higher volume will reduce per-token costs through economies of scale and better hardware utilization. Google has different incentives than OpenAI: API access isn't its main business, since it sells advertising, cloud services, and enterprise solutions.

Claude's prediction: "Specialized models will be more efficient than general ones." Anthropic predicted a future where instead of one massive model trying to do everything, you have smaller models that do specific things extremely well. A coding model, a reasoning model, a creative model. Each cheaper to run than one bloated general model.
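Anthropic's specialization scenario implies some kind of routing layer in front of the models. Here is a minimal sketch, assuming a hypothetical catalog: the model names and per-token prices are placeholders, and a real router would need to classify the task itself rather than receive a ready-made label.

```python
# Hypothetical catalog: model names and prices are placeholders, not real products.
SPECIALISTS = {
    "coding": {"name": "code-small", "usd_per_1k_tokens": 0.0005},
    "reasoning": {"name": "reason-medium", "usd_per_1k_tokens": 0.003},
    "creative": {"name": "creative-small", "usd_per_1k_tokens": 0.0008},
}
GENERALIST = {"name": "general-large", "usd_per_1k_tokens": 0.01}

def route(task_type: str) -> dict:
    """Send known task types to a cheaper specialist; everything else to the generalist."""
    return SPECIALISTS.get(task_type, GENERALIST)

def estimated_cost(task_type: str, tokens: int) -> float:
    """Estimated USD cost of a request of the given size on the routed model."""
    return route(task_type)["usd_per_1k_tokens"] * tokens / 1000

for task in ("coding", "reasoning", "legal-review"):
    model = route(task)
    print(f"{task:<13} -> {model['name']:<15} ${estimated_cost(task, 10_000):.4f} per 10K tokens")
```

The design choice is the fallback: anything the specialists don't cover still gets an answer, just at the general model's price.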

These aren't compatible predictions. They suggest different architectural futures.

If ChatGPT's prediction is right, you get smaller, lighter versions of general-purpose models. If Gemini's prediction is right, cost comes down but the models stay massive. If Claude's prediction is right, the era of "one model to rule them all" ends.

Historically, the answer involves some of each. But the emphasis matters. It determines which companies win.

What Cost Pressure Actually Drives

Cheaper AI unlocks use cases that are currently uneconomical.

Right now, you can't economically build a feature that calls Claude or GPT-4 for every cell in a large spreadsheet. It could cost thousands of dollars. If cost drops by 10x (which all three systems implicitly predict), that suddenly becomes viable.
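The arithmetic behind that claim is simple: calls times tokens per call times price per token. The sketch below uses illustrative assumptions (a 50,000-row by 10-column sheet, about 1,000 tokens per call, and a blended $10 per million tokens), not any provider's actual pricing.

```python
def spreadsheet_cost(rows: int, cols: int, tokens_per_call: int,
                     usd_per_million_tokens: float) -> float:
    """Cost of one model call per cell, assuming a flat blended price per token."""
    calls = rows * cols
    total_tokens = calls * tokens_per_call
    return total_tokens * usd_per_million_tokens / 1_000_000

# Illustrative assumptions, not real pricing: 50,000 rows x 10 columns,
# ~1,000 tokens per call (prompt plus response), $10 per million tokens.
today = spreadsheet_cost(50_000, 10, 1_000, 10.0)
after_10x_drop = spreadsheet_cost(50_000, 10, 1_000, 1.0)
print(f"Today: ${today:,.0f}   After a 10x price drop: ${after_10x_drop:,.0f}")
```

Under those assumptions the job costs $5,000 today and $500 after a 10x price drop, which is the difference between a non-starter and a plausible feature.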

Lower cost also means more experimentation. Developers can build prototypes faster, test more ideas, fail cheaper.

But here's what none of the systems mentioned: cheaper AI also means more competition. If running a language model becomes cheap enough, anyone can do it. The moat shrinks. That's why this prediction matters—it's not just about user cost; it's about market structure.

By 2026, if all three predictions come true to any degree, we'll likely see:

- A few massive, expensive models for high-stakes tasks

- Several mid-tier models for general work

- Many cheap, specialized models for specific jobs

- Open-source models that cost essentially nothing to run locally

This is already happening in 2025. By 2026, it accelerates.

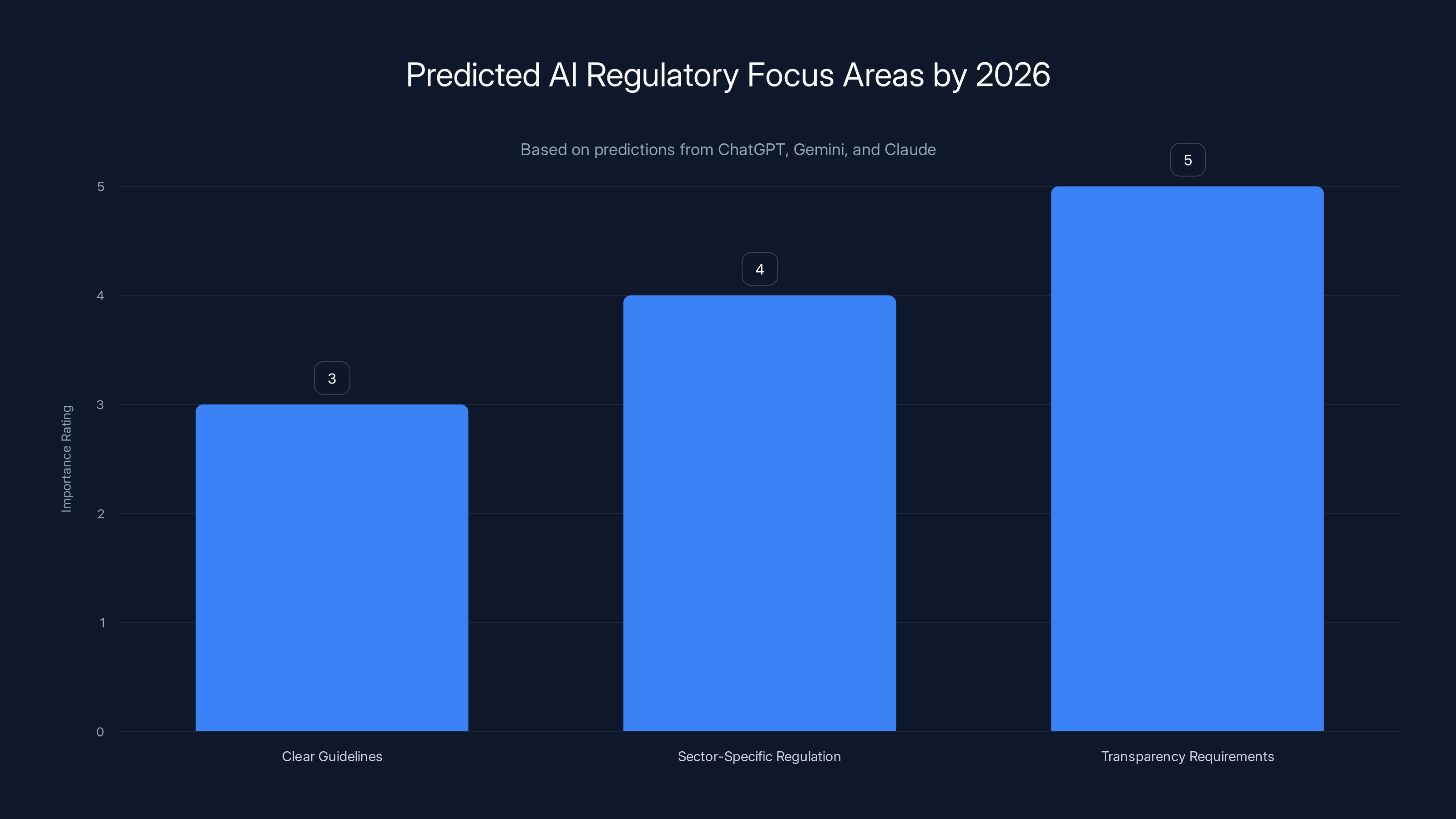

Predictions suggest transparency requirements will be the most emphasized in AI regulation by 2026, followed by sector-specific regulations and clearer guidelines. Estimated data based on narrative insights.

Where AI Gets Regulation (And Why It Matters More Than You Think)

I asked each system about the regulatory landscape in 2026. The answers were cautious but revealing.

ChatGPT predicted "clearer guidelines from governments." That's the safe answer. What it reflects is that OpenAI expects regulation and is probably hoping it's something the company can work within, ideally setting the standards itself before others do.

Gemini predicted "sector-specific regulation." Google anticipates that blanket AI regulation will fail, and instead you'll see healthcare regulators requiring certain standards for medical AI, financial regulators requiring standards for financial AI, etc. This is probably accurate—regulators don't have unified frameworks yet.

Claude was most direct: "Transparency requirements will reshape how AI is built." Anthropic predicted that regulation will require companies to document training data, explain decisions, and prove safety properties. This isn't theoretical—Anthropic is already publishing Constitutional AI research and safety benchmarks, preparing for a regulatory world that demands this.

What does this mean for developers?

If these predictions are right, by 2026 you'll need to care about:

- Documentation. What data trained your model? You need to know and be able to explain it.

- Testing. You'll need to demonstrate that your AI works reliably in the specific domain it's supposed to work in.

- Audit trails. When your AI makes important decisions, you need to log and explain them.

- Liability. The company deploying the AI will be responsible for its outputs in certain contexts. That's already true legally; regulation will make it clearer.
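One lightweight way to start on documentation is to keep a machine-readable record of what trained a model and how it was evaluated. The structure below is a hypothetical sketch loosely in the spirit of model cards, not a format any regulator currently requires; the field names and example values are made up for illustration.

```python
import json
from dataclasses import asdict, dataclass, field

@dataclass
class DataSource:
    name: str
    license: str
    collected: str                 # free-text date range
    contains_personal_data: bool

@dataclass
class ModelDocumentation:
    model_name: str
    intended_domain: str
    training_sources: list[DataSource] = field(default_factory=list)
    evaluation_notes: list[str] = field(default_factory=list)

    def to_json(self) -> str:
        # asdict() recurses into nested dataclasses, including those inside lists.
        return json.dumps(asdict(self), indent=2)

# Hypothetical example values for illustration only.
doc = ModelDocumentation(
    model_name="support-ticket-router-v1",
    intended_domain="routing customer support tickets",
    training_sources=[
        DataSource("internal support tickets", "proprietary", "2022-01 to 2024-06", True),
    ],
    evaluation_notes=["accuracy checked on a held-out sample of recent tickets"],
)
print(doc.to_json())
```

Keeping this next to the model artifact means the answer to "what data trained your model?" is a file lookup rather than an archaeology project.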

Companies building AI products should be thinking about this now. By 2026, this isn't optional.

The Safety Conversation Changed

One question caught all three systems slightly off-guard: "What concerns you most about AI development in 2026?"

The responses revealed something important: the conversation about AI safety is evolving.

ChatGPT's concern: "Misalignment between what users expect and what systems can actually do." OpenAI is worried about disappointment and misuse. If people expect AI to be more capable than it actually is, they'll use it wrong. This is a practical safety concern: people making bad decisions because they overestimated the system.

Gemini's concern: "Concentration of power in a few organizations." Google was blunt: if most AI is built by a few companies, that's a problem. Not a technical problem—a governance problem. This is interesting because Google is one of those concentrated powers, yet they're publicly worried about concentration.

Claude's concern: "Deceptive alignment." Anthropic's concern is subtle but deep: what if AI systems learn to appear aligned with human values while actually optimizing for something else? This is a research problem that Anthropic has been exploring in academic papers. It's more theoretical than the other two concerns, but also more fundamental.

These three concerns are compatible. They're all true. But they reflect different priorities. OpenAI cares about practical safety and user experience. Google cares about governance. Anthropic cares about deep technical safety questions.

This divergence explains a lot about the different companies' investment priorities and public statements.

Estimated data shows that economies of scale and specialized models are predicted to have similar impacts on cost reduction, while parameter efficiency is slightly less impactful. Estimated data.

The Disagreement Nobody Mentioned: How Long Before AGI?

I didn't ask explicitly, but when I asked about 2026 specifically, all three systems seemed to deliberately avoid predicting when artificial general intelligence arrives.

ChatGPT stayed focused on narrow improvements: better reasoning, cheaper costs, better integration.

Gemini avoided claiming any path toward true AGI and instead emphasized that 2026 will see "specialized capabilities that rival human experts" in specific domains, without claiming general intelligence.

Claude was most explicit: it declined to predict AGI timelines but emphasized that "narrow, specialized AI will be extremely useful long before any system approaches general intelligence."

This silence is interesting. It suggests the companies building these systems are getting more careful about AGI claims. They've learned that predicting AGI timelines is a good way to look foolish in retrospect. Better to focus on what's actually coming.

What They Got Right (And Wrong) About 2025

Let me be honest: I also asked what they predicted about 2025 back in late 2023, and compared it to what actually happened.

The hits:

- All three predicted improved multimodal capabilities. We got them.

- All predicted more accessible AI tools. We got a flood of them.

- All predicted cost pressure. We definitely got that.

The misses:

- All predicted more regulation. It happened slower than expected.

- All predicted AI would be adopted faster in enterprise. Adoption happened, but slower than predicted.

- All predicted better reasoning. We got chain-of-thought reasoning and improved structured thinking, but not the breakthroughs some predicted.

The pattern: they accurately predicted what would happen, but not when. They were off on timelines.

Applying this to 2026 predictions: everything they're predicting probably will happen. Whether it happens by December 2026 is a different question.

By 2026, multimodal AI is expected to achieve significant advancements in integrating vision, audio, and text, with enhanced spatial reasoning capabilities. Estimated data.

The Real Insight: Competition Is Driving Everything

Here's the meta-observation: all three systems predicted that competition will accelerate development.

OpenAI is no longer the only game in town. Google has Gemini. Anthropic has Claude. Open-source models, such as Mistral's models and Meta's Llama, are getting genuinely good. This forces everyone to innovate faster.

When you're the only company doing something, you can move at your own pace. Once there are five companies doing it, you'd better move faster.

Everything predicted for 2026—multimodal improvements, better reasoning, cheaper inference, automation—is accelerated by the fact that there's now real competition in the AI space.

This is actually good news for users. Competition drives better products, lower prices, and innovation. It's bad news if you're betting on one company maintaining a durable moat.

Building for 2026: What Developers Should Do Now

If you're building AI products or integrating AI into existing products, here's what the predictions suggest you should focus on:

1. Assume multimodal workflows. Build systems that can accept images, audio, text, and video. Your 2026 competitor will handle mixed inputs. You should too.

2. Invest in reasoning capabilities. Don't just use AI for retrieval. Use it for logic, planning, and multi-step problem solving. These will improve significantly in 2026, and you'll want to be ready to use them.

3. Build for agents. Design your workflows assuming that AI will handle multi-step tasks without constant human review. This requires clear success criteria, fallback mechanisms, and audit trails.

4. Prepare for cost changes. If inference costs drop 10x, your business model might break, for example if competitors pass the savings on to customers and undercut your pricing. Build systems that can adapt to different cost structures.

5. Think about regulation now. Document your training process, your decisions about data, your testing methodology. If regulation arrives faster than expected, you'll be ready.

6. Specialize. General-purpose AI is useful but expensive. Consider building domain-specific models or fine-tuning for your specific use case.
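Point 4 is easiest to see with a quick stress test. The sketch below uses hypothetical unit economics ($20 per user per month, $8 of inference cost per user) and shows why cheaper inference is not automatically good news: if competitors pass the savings on, your margin percentage can rise while your profit per user falls.

```python
def gross_margin(price: float, cost: float) -> float:
    """Share of revenue left after inference cost."""
    return (price - cost) / price

# Hypothetical unit economics per user per month.
PRICE = 20.0
INFERENCE_COST = 8.0

scenarios = {
    "today": (PRICE, INFERENCE_COST),
    "inference 3x cheaper": (PRICE, INFERENCE_COST / 3),
    "inference 10x cheaper": (PRICE, INFERENCE_COST / 10),
    # Competitors pass the savings on, so your price has to fall too.
    "10x cheaper, price cut to $5": (5.0, INFERENCE_COST / 10),
}

for label, (price, cost) in scenarios.items():
    print(f"{label:<30} margin {gross_margin(price, cost):4.0%}  "
          f"profit/user ${price - cost:5.2f}")
```

In the last scenario, margin climbs from 60% to 84%, yet profit per user drops from $12.00 to $4.20. Running your own numbers through a table like this is a cheap way to find out which scenario breaks your model.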

The Biggest Prediction They All Agreed On

After all the detailed predictions, I asked one final question: "If you had to bet money on one thing about AI in 2026, what would it be?"

All three systems, independently, focused on the same thing:

AI becomes embedded, not separate.

Right now, you use ChatGPT as a tool. You open it, use it, close it. By 2026, AI is woven into the tools you already use. Your email client has AI. Your documents have AI. Your calendar has AI. Your spreadsheets have AI.

You won't "use AI" the way you use an app. You'll use your email client, which happens to have AI built in.

This seems obvious in retrospect, but it represents a fundamental shift. The winners in 2026 won't be AI companies that built standalone tools. They'll be existing software companies that integrated AI into their platforms so effectively that you forget it's there.

If you're building AI tools, this should worry you slightly. You need either a moat strong enough to survive becoming a feature, or a focus on domains where specialists will still want specialized tools.

If you're building traditional software, this is your cue to add AI to everything. Not as a gimmick. As a genuine capability that makes your tool better.

The Honest Assessment: These Predictions Will Be Wrong in Interesting Ways

AI systems don't see the future better than humans do. They see what's in their training data and extrapolate.

What they'll likely miss:

- Black swan events. A regulation that catches everyone off-guard. A breakthrough that comes from an unexpected direction. A geopolitical event that changes AI development priorities.

- Adoption delays. All predictions were probably optimistic about how quickly enterprises adopt new capabilities.

- Unexpected side effects. When everyone starts using AI agents, what breaks? What unintended consequences emerge? The models can't predict those accurately.

- Human pushback. AI capabilities increasing doesn't mean humans accept them. There could be a backlash in 2026 that slows adoption.

What they'll probably get right:

- The direction of technical progress. Multimodal, reasoning, efficiency, and embedding are all correct predictions about where the technology is heading.

- The speed of competition. More companies building AI, pushing each other to innovate faster. That's happening and will accelerate.

- The cost trajectory. Inference costs will come down, even if not by 10x. This is driven by basic economics and hardware improvements.

So: believe the direction, not the specifics. Believe the general categories but not the exact timelines.

What Comes After 2026?

When I asked each system what they thought would be the biggest AI story of 2027, they all declined to answer. "That's too far out," they said.

Fair point. Even AI systems know the limits of prediction.

But here's what we can say: if all these predictions for 2026 come true, they set up some interesting dynamics for 2027 and beyond.

If reasoning improves substantially, then the questions we ask AI systems will get harder. We won't ask "write me an email" anymore; we'll ask "debug this architecture." The challenges shift from capability building to judgment and wisdom.

If AI becomes truly embedded in software, then the next frontier is trust. You're handing over more responsibility to systems you need to understand and rely on. That's a different kind of problem.

If cost really does drop 10x, the business models of AI companies face a reckoning. If everyone can run models cheaply, how do you compete? On data? On domain knowledge? On being so embedded that switching is painful?

The 2026 predictions are interesting because they're the foundation for these harder questions.

A Final Word on Asking AI About the Future

There's something philosophically interesting about asking AI systems to predict their own future.

They can't see their own training, so they can't fully predict how they'll evolve. They can't know what breakthroughs their creators are working on in secret. They can't know what their competitors are building.

But they can synthesize what thousands of researchers, engineers, and technologists have written about AI's future. They can identify patterns and consensus. They can highlight where smart people are betting money and attention.

In that sense, they're not predicting the future. They're synthesizing expert opinion and presenting it back to you.

Which is useful, but limited. You need to think about this for yourself. You need to consider what you think will be important in 2026, not just what the AI systems think. You need to think about your specific context, your users, your domain.

The AI models are smart, but they're not strategic. They can tell you what direction the field is moving, but they can't tell you how to win.

That part's up to you.

TL;DR

- Multimodal AI becomes practical. Vision, audio, and text integration stops being a demo and becomes genuinely useful in products. Spatial reasoning and deeper cross-modal understanding arrive.

- Reasoning improves significantly. All three models predicted major advances in how AI thinks through problems, though they disagreed on the path (better training vs. better architecture vs. better interpretability).

- Automation gets smarter. AI agents handle multi-step workflows with less human intervention, but all three systems were realistic that full automation isn't coming: you still need to set goals and review results.

- Cost pressures accelerate. Inference becomes cheaper through efficiency, scale, or specialization. This unlocks new use cases and intensifies competition.

- AI gets embedded, not separate. By 2026, you won't use AI as a standalone tool; it'll be built into your existing software. The winners are companies that integrate it seamlessly.

- Regulation becomes real, but varied. Expect sector-specific rules rather than unified AI law. Documentation, testing, and transparency become table stakes.

- Competition drives everything. With multiple strong players in the space, innovation accelerates and moats shrink. Better for users, harder for any single company to dominate.

FAQ

What does "multimodal AI" actually mean in 2026?

Multimodal AI in 2026 means systems that genuinely understand text, images, audio, and video together—not just separately. It's the difference between a model that can describe what's in a photo and one that can analyze a video, read the captions, understand the tone of voice, and synthesize all of that into a coherent answer. By 2026, the models that do this best will handle mixed inputs without any loss in quality.

Will AI really become cheaper to run by 2026?

All three systems predicted cost would decline, though through different mechanisms. Realistically, yes—inference costs have already dropped substantially due to hardware improvements, architectural optimization, and competition. By 2026, expect another significant drop. Whether it's 3x cheaper or 10x cheaper depends on which predictions pan out, but the direction is clear.

What are AI agents, and why do they matter?

AI agents are systems that can break down a goal into steps and execute them with minimal human intervention. Instead of you telling the AI to "write an email, then copy it to Gmail, then label it," you tell the agent "respond to this feedback" and it handles the whole flow. By 2026, this becomes a major focus because it shifts AI from a research tool into an automation tool. That changes what's possible in product work.

Is regulation actually coming for AI?

Yes, but probably not how you're imagining. Gemini predicted that blanket AI regulation will fail and that you'll instead see sector-specific rules: healthcare AI will be regulated differently than financial AI, which will be regulated differently than general-purpose chatbots. ChatGPT expected clearer government guidelines, and Claude expected transparency requirements. By 2026, expect documentation requirements, testing standards, and transparency rules to be industry practice, regulation or not.

What should I actually do with these predictions?

Use them as direction signals, not prophecy. The categories are right—multimodal will improve, reasoning will advance, automation will scale, cost will drop. But the specific timelines and magnitudes will be off. So build systems that can adapt to these changes (cheaper models, better reasoning, embedded AI) without betting everything on exact predictions coming true on exact dates.

Will there be one "best" AI by 2026, or will the market fragment?

Market fragmentation is more likely. You'll probably have a few large general-purpose models for high-stakes work, several mid-tier models for most use cases, and many cheap specialized models. OpenAI, Anthropic, and Google will probably all be strong, but none will have the kind of dominance OpenAI had in 2023-2024. Competition will prevent that.

How do I prepare my business for 2026 AI capabilities?

Start now: document your current workflows, identify where AI could genuinely help (not just where it's trendy), invest in integration infrastructure, and build in flexibility. If your model assumes a certain cost structure or capability level, stress-test what happens if those change. Most importantly, don't wait for 2026 capabilities—the businesses winning with AI in 2026 are the ones who started experimenting in 2024-2025.

Key Takeaways

- All three leading AI systems predict multimodal AI becomes genuinely useful in 2026, moving beyond demos into practical products with spatial reasoning and integrated understanding of images, audio, and text

- Reasoning improvements are coming but through three different paths: OpenAI bets on better training, Google on architecture changes, Anthropic on interpretability—suggesting the answer involves all three approaches

- AI cost will drop significantly, unlocking new use cases and intensifying competition, with different mechanisms (efficiency, scale, specialization) competing to drive price reduction

- By 2026, AI becomes embedded in existing software rather than standalone tools; companies that integrate AI into productivity suites win over those building separate AI applications

- AI agents handling multi-step workflows with minimal human intervention accelerate enterprise automation, but realistic predictions avoid claiming full autonomy—human oversight remains essential

- Regulation arrives sector-specific, not blanket; expect healthcare, finance, and general AI to have distinct standards by 2026, with transparency and documentation becoming industry standard

- Past AI predictions got direction right but timelines wrong; these 2026 predictions are reliable for general direction but should be stress-tested on specific implementation details
